“I believe this not only damages my own interests but, more importantly, harms public interests, especially for seniors who prioritize health and are drawn to various health remedies. I urge elderly friends to be cautious and verify the source before making purchases. Ask yourself, is this person an expert in this field? For example, I'm a policy expert, not a medical specialist, so I would never recommend such treatments.”

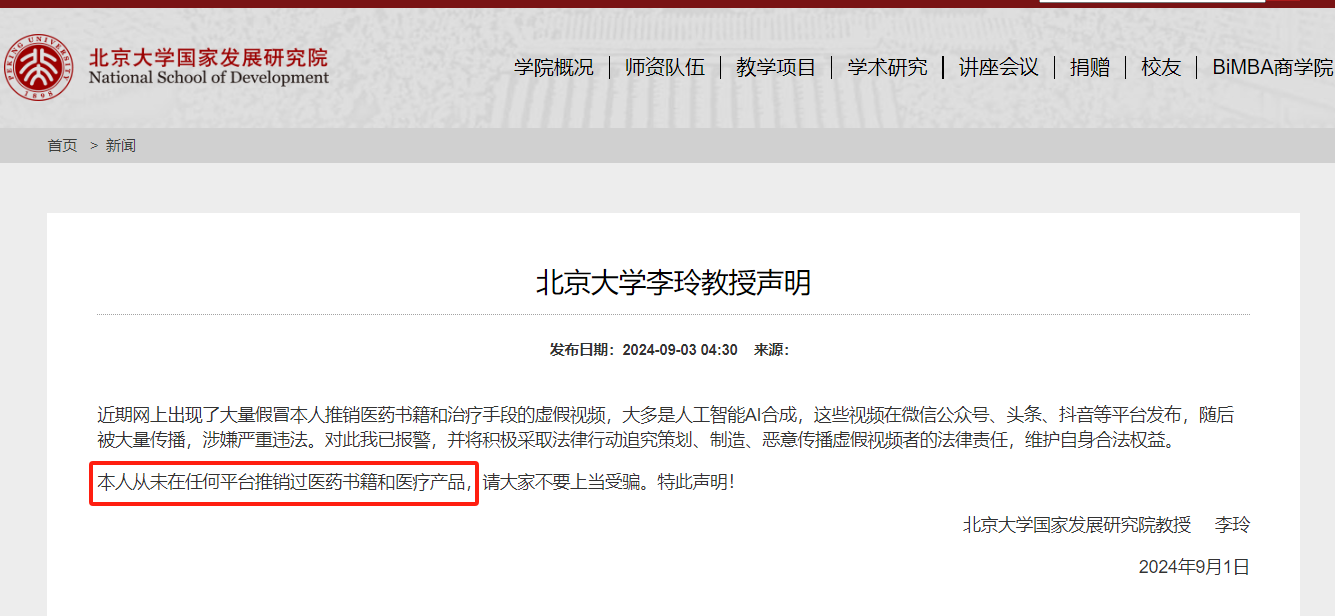

On September 1, Professor Li Ling from the National School of Development at Peking University issued a public statement saying, “Recently, numerous fake videos have appeared online, falsely using my image to promote medical books and treatment methods. These videos are largely AI-generated. I have never endorsed any medical books or products on any platform. Please be aware of this scam.” She has since reported the matter to the police.

Celebrities hold significant social influence and public trust. Scammers use the reputations of well-known individuals, like Professor Li, to create trust in their fake endorsements, especially among seniors concerned with health. By cloning her likeness with AI, fraudsters present a believable endorsement, making it harder for elderly viewers to doubt its authenticity. Apart from Professor Li, famous figures like Scarlett Johansson, Kylie Jenner, Taylor Swift, and Tom Hanks have also been impersonated in similar scams.

The Scam Process in Fake Celebrity Ads

Fraudsters use AI to produce fake celebrity advertisements in a multi-step scam that runs from data collection and AI-generated video to ad distribution and, finally, tricking victims into transferring money. Combining the public’s trust in celebrities with this technology makes the scams highly convincing.

- Data Collection: Fraudsters begin by gathering public information on the targeted celebrity. This includes photos, videos, public speeches, interviews, and social media posts, all of which provide material for AI-generated content. In Professor Li’s case, scammers likely obtained footage of her public speeches or academic lectures to capture her voice, tone, and facial expressions.

- Fabrication of AI-Generated Audio and Video: With sufficient material, scammers use AI to synthesize audio and video. AI technology can accurately replicate a person’s voice and facial expressions to create realistic fake advertisements. In Professor Li’s case, the fraudsters likely produced a video in which she appears to endorse health products or medical books she has never promoted.

- Spreading Fake Ads: The resulting fake video is then spread across various online platforms, such as short video apps, social media, and group chats. These platforms are frequently used by older adults, the scammers’ primary target audience, and targeted marketing lets the fake ads reach specific groups. Because the videos so closely resemble genuine content, viewers are easily deceived.

- Creating a Sense of Urgency and Authority: To boost their success rate, scammers often use scare tactics or emphasize the authority of the content. For example, they might exaggerate the risks of certain diseases or claim the promoted product is a “limited-time offer” or “exclusive.” For health-conscious seniors, fraudsters may even fake expert qualifications or fabricate scientific evidence to strengthen trust.

- Inducing Purchases: Once convinced, the victim is prompted to buy the fake product through links or customer service hotlines embedded in the video. In Professor Li’s case, scammers might include a link or QR code in the video, prompting victims to pay for bogus “medical books” or “treatment products.” Often, these products are non-existent, of poor quality, or completely worthless, resulting in financial loss for the victim.

How Ordinary People Can Identify Fake Celebrity Ads

Given this multi-step process, how can ordinary people detect fake celebrity endorsements?

- Be skeptical of shocking or “too good to be true” statements, especially involving celebrities. Always apply common sense and logic.

- Look for unnatural features in the content, like odd blinking patterns, mismatched audio, or distorted body parts, as these could signal deepfakes.

- Cross-check statements with reliable news media and websites. Don’t believe content without fact-checking first.

- Before making a purchase or decision, consult friends, family, or colleagues for advice and do some research. Don’t take the promoter’s word at face value, even if the person appears to be someone like Elon Musk.

- Avoid commenting on, sharing, or even clicking on unverified social media posts. Interacting with accounts impersonating celebrities increases your risk of being scammed.

- Never share personal details with unverified individuals, websites, or apps.

Platform Measures Against Fake Celebrity Ads

To counter fake celebrity ads and fake information on social media platforms, the Dingxiang Defense Cloud Business Security Intelligence Center recommends accurately identifying fraudulent accounts as the first step in effective fraud prevention.

- Identify abnormal devices. Dingxiang Device Fingerprinting helps distinguish legitimate users from potential fraudsters by recording and comparing device fingerprints. It uniquely identifies devices and detects malicious use of virtual machines, proxy servers, emulators, and similar tools, while analyzing behaviors that don’t match normal user patterns, such as multi-account logins, frequent IP address changes, and device property changes.

- Detect suspicious account operations. Unusual activities like logging in from different locations, switching devices, changing phone numbers, or sudden activity on dormant accounts require stronger verification. Continuous identity verification throughout sessions is essential. Dingxiang’s “No Touch” verification can quickly and accurately distinguish human users from bots, supporting real-time monitoring and blocking of abnormal behavior.

- Prevent face-swapped fake videos. Dingxiang’s full-spectrum facial security threat detection solution uses multi-dimensional data, including device environment, facial information, image authenticity, user behavior, and interaction state, for intelligent verification. It quickly detects and blocks over 30 types of malicious behavior, including injection attacks, live forgeries, image forgery, camera hijacking, debugging risks, memory tampering, rooting/jailbreaking, malicious ROMs, and emulator usage. The solution flexibly adjusts video verification strength, allowing seamless verification for legitimate users while increasing security for suspicious accounts.

- Identify potential fraud threats. Dingxiang Dinsight aids businesses in risk assessment, anti-fraud analysis, and real-time monitoring, improving the efficiency and accuracy of risk control. Dinsight processes daily risk control strategies in less than 100 milliseconds on average, supports multi-party data integration, and draws on established indicators, strategies, and deep learning technologies for self-monitoring and iterative risk control. Coupled with the Xintell intelligent modeling platform, it automatically optimizes security strategies for known risks and configures different scenarios to support risk control strategies. Using associative networks and deep learning, it standardizes complex data processing, feature derivation, model building, and deployment into an end-to-end modeling service.
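The measures above share one idea: combine device and account signals into a risk level, then scale verification strength to match. The Python sketch below illustrates that idea only. It is not Dingxiang's implementation or API; the `SessionSignals` fields, the weights, and the thresholds are all hypothetical values chosen for illustration, and a production system would tune them on real data.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    """Hypothetical per-session signals; field names are illustrative only."""
    is_emulator_or_vm: bool = False      # device looks like an emulator or virtual machine
    uses_proxy: bool = False             # traffic arrives through a proxy server
    accounts_on_device: int = 1          # distinct accounts seen on this device
    ip_changes_last_hour: int = 0        # how often the IP address changed recently
    new_login_location: bool = False     # login from a previously unseen location
    new_device: bool = False             # account moved to a previously unseen device
    phone_number_changed: bool = False   # bound phone number was recently changed
    days_dormant_before_login: int = 0   # inactivity period before this session


def risk_score(s: SessionSignals) -> int:
    """Add up assumed weights for each abnormal signal, capped at 100."""
    score = 0
    if s.is_emulator_or_vm:
        score += 40
    if s.uses_proxy:
        score += 15
    if s.accounts_on_device > 3:
        score += 20
    if s.ip_changes_last_hour > 5:
        score += 15
    if s.new_login_location:
        score += 10
    if s.new_device:
        score += 10
    if s.phone_number_changed:
        score += 10
    if s.days_dormant_before_login > 180:
        score += 10
    return min(score, 100)


def verification_level(score: int) -> str:
    """Map a score to a verification strength (thresholds are assumptions)."""
    if score >= 60:
        return "block"
    if score >= 30:
        return "strong_verification"
    return "seamless"


if __name__ == "__main__":
    normal = SessionSignals()
    takeover = SessionSignals(new_login_location=True, new_device=True,
                              phone_number_changed=True)
    farm = SessionSignals(is_emulator_or_vm=True, accounts_on_device=8,
                          ip_changes_last_hour=9)
    for name, sig in [("normal", normal), ("takeover", takeover), ("farm", farm)]:
        print(name, risk_score(sig), verification_level(risk_score(sig)))
```

A fixed-weight rule set like this is easy to audit but easy for fraudsters to probe, which is one reason the text describes pairing rules with learned models and ongoing strategy updates.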