A recent CCTV report highlighted a case of "AI face-swapping" video conference fraud in Hong Kong involving HK$200 million (approximately US$25 million). The case involved deepfake technology, a form of artificial intelligence.

The fraudsters meticulously targeted a company by:

- Collecting data: They gathered face and voice data of the target company's senior management personnel beforehand.
- "Face-changing": Using AI technology, they superimposed the stolen data onto their own faces, creating a seemingly real video conference.

This tactic ultimately deceived a company employee into transferring HK$200 million to the fraudsters' accounts.

On February 5, the Hong Kong Police Force disclosed the case and urged the public to be vigilant. Subsequently, on February 7, Dingxiang Defense Cloud Business Security Intelligence Center provided an analysis of the case, offering several effective security detection and prevention methods.

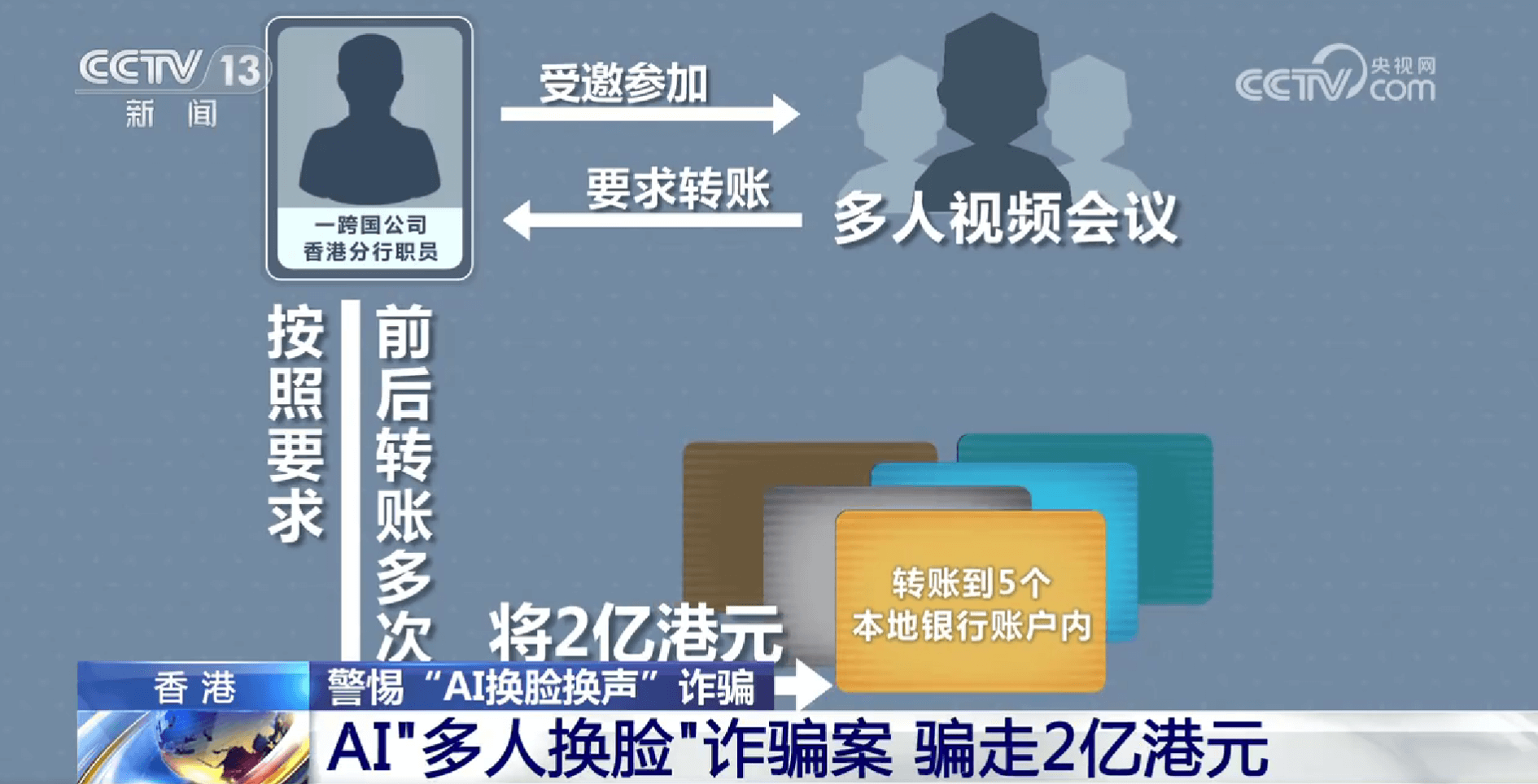

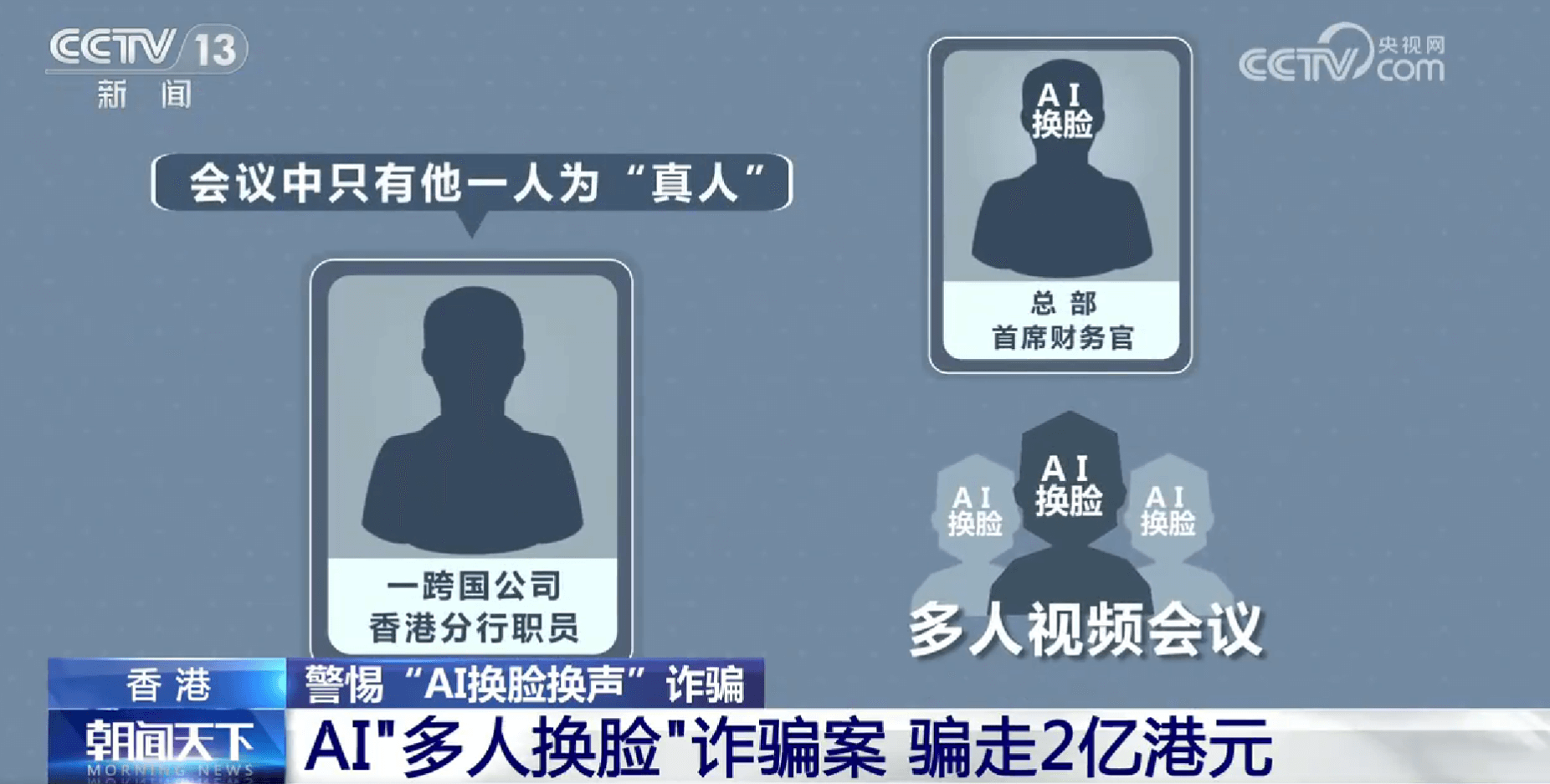

The scam began in January 2024 when a company employee received an email supposedly from the company's Chief Financial Officer (CFO) at headquarters. The email initiated a video call, inviting the targeted employee and several "colleagues" from various locations to participate. During the video conference, the employee, misled by the seemingly familiar appearance and voices, dismissed his initial suspicions.

Following the meeting, the employee, acting on the instructions of the fraudulent "CFO," made 15 transactions within a week, totaling HK$200 million. To maintain communication, the fraudsters used several channels: WhatsApp, email, and individual video calls. Only after reporting the transactions to company officials did the employee realize he had been tricked.

The Hong Kong police investigation revealed an intricate scheme. The fraudsters scoured social media and video platforms, acquiring photos and videos of the CFO and other individuals. Using deepfake technology, they then manipulated voices and combined them with the stolen visuals to fabricate the fraudulent video. Notably, except for the targeted employee, all participants in the video conference were deepfakes. The transferred funds were distributed across five local bank accounts and swiftly dispersed further, making recovery difficult.

This case underscores the growing sophistication of cybercrime and the importance of implementing robust security measures to combat such threats. By remaining vigilant and adopting recommended security practices, individuals and organizations can better protect themselves from falling victim to similar scams.

How to Spot "Face-Swapping" Impostors in Video Calls

This fraud case serves as a warning to both businesses and individuals. Deepfakes have become sophisticated enough that they are very difficult to recognize with the naked eye unless you have been specifically trained to spot them, and some conventional detection tools also fail to detect them in time. When faced with similar "video" scenarios, businesses and individuals should stay vigilant and adopt a multi-pronged strategy to identify and defend against deepfake fraud.

During video calls:

- Ask the other person to touch their nose or face and watch for facial changes. A real nose will deform when pressed.
- Ask the other person to eat or drink and watch for facial changes.
- Ask them to perform unusual actions or expressions, such as waving or making a difficult gesture. This can disrupt the facial data and cause jitter, flicker, or other anomalies.
- In a one-on-one conversation, ask questions that only the real person would know to verify their identity.
- Always verify any request for a money transfer by calling or otherwise contacting the person through a different channel.
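The advice to verify transfer requests through a different channel can be written down as a simple approval rule. The sketch below is only an illustration: the class names, the `is_transfer_approved` function, and the channel labels such as `directory_phone` are hypothetical and do not come from the police report or from any real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferRequest:
    requester: str  # identity claimed in the request, e.g. "CFO"
    channel: str    # channel the request arrived on, e.g. "video_call"


@dataclass(frozen=True)
class Confirmation:
    confirmed_by: str  # person who confirmed the request
    channel: str       # channel used for the callback


# Hypothetical policy: callback channels the company initiates itself.
TRUSTED_CALLBACK_CHANNELS = frozenset({"directory_phone", "in_person"})


def is_transfer_approved(request: TransferRequest,
                         confirmations: list[Confirmation]) -> bool:
    """Approve only if the claimed requester confirmed the request on a
    trusted channel that differs from the one the request arrived on."""
    return any(
        c.confirmed_by == request.requester
        and c.channel in TRUSTED_CALLBACK_CHANNELS
        and c.channel != request.channel
        for c in confirmations
    )


# Usage: a request made in a video call is not approved until the
# claimed requester confirms it on a separate, trusted channel.
request = TransferRequest(requester="CFO", channel="video_call")
same_channel = [Confirmation("CFO", "video_call")]
callback = [Confirmation("CFO", "directory_phone")]
print(is_transfer_approved(request, same_channel))
print(is_transfer_approved(request, callback))
```

The point of the rule is that a convincing face or voice on the requesting channel never counts as confirmation by itself; the confirmation must come from a contact path the employee looks up independently.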

Face anti-fraud systems that combine manual review with AI technology can help businesses improve their anti-fraud capabilities.

The DingXiang full-link panoramic face security threat awareness solution can effectively detect and identify fake videos. It performs intelligent risk assessment and risk rating on user face images using multi-dimensional information such as face environment monitoring, liveness detection, image forgery detection, and intelligent verification, so it can quickly identify fake authentication risks.
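To make the idea of multi-dimensional risk rating concrete, here is a minimal sketch that combines four signals into one score and a rating. It is not DingXiang's algorithm, which the article does not describe; the weights, thresholds, field names, and the assumption that each signal is a risk value between 0 and 1 are all invented for illustration.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class FaceSignals:
    """Hypothetical per-signal risk values, each in the range 0.0 to 1.0."""
    environment: float   # face environment monitoring
    liveness: float      # liveness detection
    forgery: float       # image forgery detection
    verification: float  # intelligent verification


# Assumed weights (they sum to 1.0); a real system would tune these.
WEIGHTS = {"environment": 0.2, "liveness": 0.3,
           "forgery": 0.3, "verification": 0.2}


def risk_score(signals: FaceSignals) -> float:
    """Return the weighted sum of the signal risks."""
    values = asdict(signals)
    for name, value in values.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return sum(WEIGHTS[name] * value for name, value in values.items())


def risk_rating(score: float) -> str:
    """Map a score to a rating using assumed thresholds."""
    if score < 0.3:
        return "low"
    if score < 0.7:
        return "medium"
    return "high"


# Usage: strong forgery and liveness signals raise the rating even
# when the other two signals look clean.
suspicious = FaceSignals(environment=0.0, liveness=1.0,
                         forgery=1.0, verification=0.0)
print(risk_rating(risk_score(suspicious)))
```

A weighted sum is the simplest way to combine independent detectors; it keeps any single noisy signal from deciding the outcome alone.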

The DingXiang full-link panoramic face security threat awareness solution provides real-time risk monitoring for face recognition scenarios and key operations. It targets behaviors such as camera hijacking, device forgery, and screen sharing, and triggers an active defense mechanism to handle them.

Upon detecting fake videos or abnormal face information, the system supports automatic execution of defense strategies. Once a device matches a defense strategy, the corresponding defense measures are applied, which can effectively block risky operations.
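The step from a detected behavior to an automatic defense measure can be pictured as a lookup table. The sketch below is an assumption-laden illustration, not the product's actual configuration: the event names mirror the behaviors mentioned above, while the `Action` levels, the `DEFENSE_STRATEGY` table, and the `decide` function are hypothetical.

```python
from enum import IntEnum


class Action(IntEnum):
    """Hypothetical defense measures, ordered from least to most strict."""
    ALLOW = 0
    STEP_UP = 1  # require additional verification
    BLOCK = 2    # stop the risky operation


# Assumed mapping from detected events to defense measures.
DEFENSE_STRATEGY = {
    "camera_hijacking": Action.BLOCK,
    "device_forgery": Action.BLOCK,
    "fake_video": Action.BLOCK,
    "screen_sharing": Action.STEP_UP,
    "abnormal_face": Action.STEP_UP,
}


def decide(detected_events: list[str]) -> Action:
    """Return the strictest action triggered by any detected event.

    Events missing from the table are treated as ALLOW in this sketch;
    a real system might choose to treat unknown events more strictly.
    """
    return max(
        (DEFENSE_STRATEGY.get(event, Action.ALLOW) for event in detected_events),
        default=Action.ALLOW,
    )


# Usage: one blocking event is enough to block the whole operation.
print(decide(["screen_sharing", "camera_hijacking"]).name)
```

Taking the strictest matching action means adding a new detector can only tighten the response, never loosen it.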

Reduce or avoid sharing sensitive information such as account information, family members, travel plans, and job positions on social media. This prevents fraudsters from downloading the information and using it to create deepfake images and audio for identity forgery.

Continuously educate the public about deepfake technology and its associated risks. Stay up-to-date on the latest developments in AI and deepfake technology and adjust your security measures accordingly.
