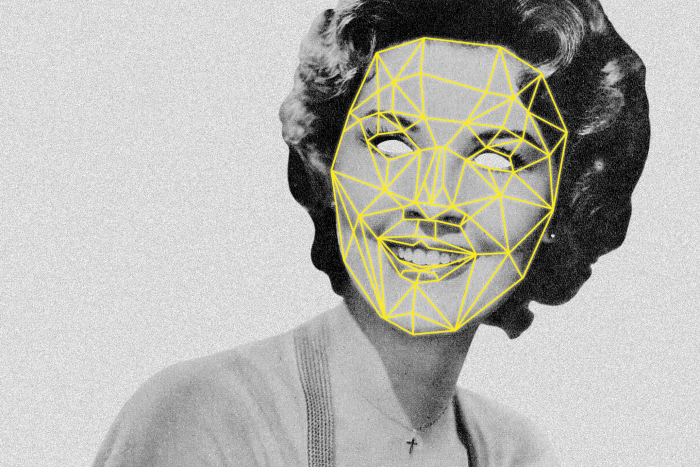

Deepfake is a technology that uses artificial intelligence to replace one person's face with another's in images or videos. The technique draws on a range of technologies and algorithms that can generate highly realistic visuals. By blending fabricated content with genuine information, Deepfakes can be used for identity forgery, spreading misinformation, creating fake digital content, and other forms of deception.

According to a KPMG report, the number of Deepfake videos available online has increased by 900%. In addition, research by Home Security Heroes found that of 95,820 Deepfake videos online, 98% were pornographic. Furthermore, 99% of these Deepfake videos featured women, and 94% involved people from the entertainment industry. A study by cybersecurity provider ESET found that 61% of women in the UK are concerned about becoming victims of Deepfake pornography.

A special edition of "DEEPFAKE Threat Study and Security Strategy", released by the Dingxiang Defense Cloud Business Security Intelligence Center, highlights another major factor behind the proliferation of Deepfakes: the easy availability of tools that simplify the creation of fake videos and images. A Home Security Heroes survey indicates that at least 42 user-friendly Deepfake tools are currently available, and that these tools are searched for online tens of millions of times each month. Furthermore, with the emergence of Cybercrime-as-a-Service, ordinary individuals can easily purchase Deepfake services or technology.

Security Measures: How to Prevent the Spread of AI-Generated Pornographic Videos?

Attackers use a variety of channels, such as social media, email, remote meetings, online recruitment, and news sources, to carry out a range of Deepfake fraud attacks on both enterprises and individuals.

The "DEEPFAKE Threat Study and Security Strategy" intelligence digest suggests that combating Deepfake fraud requires effective identification and detection of Deepfake-manipulated content, as well as measures to prevent Deepfake fraud from being exploited and disseminated. This demands not only technical countermeasures but potentially also responses to complex psychological tactics and efforts to raise public safety awareness. In addition, regulatory bodies need to impose penalties on producers of Deepfake pornography.

Individuals should take precautions to prevent the theft and replication of their information.

Avoid sharing personal photos, voice recordings, and videos on social media platforms, and minimize the disclosure of sensitive information such as account details, family members, travel schedules, and job positions, to reduce the risk of fraudsters stealing and forging your identity. If you come across Deepfake videos or images, promptly report them to the social media platform's administrators and to law enforcement agencies.

AI tools can strengthen the security of facial content.

The "European Union Artificial Intelligence Act" requires providers of AI tools to design them so that synthetic or fake content can be detected, for example by adding digital watermarks, and to promptly restrict the use of digital identity cards for identity verification. AI watermarking works by embedding a unique signal into the output of an AI model, whether an image or text, so that the content can be identified as AI-generated and recognized as such by others.
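The idea of "embedding a unique signal" can be illustrated with a classic spread-spectrum image watermark. This is a minimal sketch under stated assumptions: the image is 8-bit grayscale flattened into a Python list, and the embedder and detector share a secret integer key. It is not the scheme required by the EU AI Act or used by any particular AI provider, and the function names (`embed_watermark`, `detect_watermark`) and parameters (`strength`, `threshold`) are hypothetical. Real AI watermarks must also survive compression, cropping, and re-encoding, which this toy example does not attempt.

```python
import random


def key_pattern(key: int, n: int) -> list[int]:
    """Pseudo-random +1/-1 pattern derived from a secret key (the 'unique signal')."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed_watermark(pixels: list[int], key: int, strength: int = 3) -> list[int]:
    """Add a faint keyed pattern to 8-bit grayscale pixels, clamped to 0..255."""
    pattern = key_pattern(key, len(pixels))
    return [min(255, max(0, p + strength * s)) for p, s in zip(pixels, pattern)]


def detect_watermark(pixels: list[int], key: int, threshold: float = 1.5) -> tuple[bool, float]:
    """Correlate the mean-centred image with the keyed pattern.

    A marked image scores close to `strength`; an unmarked image, or a check
    with the wrong key, scores close to 0.
    """
    pattern = key_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    score = sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)
    return score > threshold, score


if __name__ == "__main__":
    # Hypothetical stand-in for a real image: 256x256 random mid-tone pixels.
    rng = random.Random(0)
    image = [rng.randint(50, 200) for _ in range(256 * 256)]
    marked = embed_watermark(image, key=1234)
    print(detect_watermark(marked, key=1234))  # detected, score near `strength`
    print(detect_watermark(image, key=1234))   # not detected, score near 0
```

The change to each pixel is only a few intensity levels, so it is hard to see, yet correlating over tens of thousands of pixels makes it statistically easy to detect for anyone who holds the key.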

Social media platforms should restrict the dissemination of pornographic videos.

Social media platforms typically present themselves as mere conduits for content. The Australian Competition and Consumer Commission (ACCC) believes that platforms such as Facebook should be held accountable as accessories to fraud and should promptly remove Deepfake content used for fraudulent purposes.

YouTube requires anyone uploading videos to the platform to disclose certain uses of synthetic media, so that viewers know what they are watching is not genuine, thereby reducing the fraudulent exploitation of Deepfake videos. Similarly, Facebook may display an icon on Deepfake-generated content clearly indicating that it is AI-generated.

Media services should strengthen the security of facial recognition applications.

The Dingxiang Comprehensive Face Security Threat Perception Solution can detect and identify Deepfake videos and images. It monitors facial recognition scenarios and critical operations in real time for risks such as hijacked cameras, device forgery, and screen sharing, and then performs verification using multiple dimensions of information, including facial environment monitoring, liveness detection, image authentication, and intelligent verification. When it discovers forged videos or abnormal facial information, it can automatically block the abnormal or fraudulent operation.
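The monitor, verify, and block flow described above can be sketched as a simple rule-based decision. This is a hypothetical illustration, not Dingxiang's product or API: the `FaceSessionSignals` fields, their 0.0 to 1.0 score ranges, and the thresholds `min_liveness` and `min_authenticity` are all assumptions. In practice each signal would come from its own detector (camera-hijack detection, liveness models, image forensics) rather than being passed in directly.

```python
from dataclasses import dataclass


@dataclass
class FaceSessionSignals:
    """Hypothetical per-session signals; field names are illustrative only."""
    camera_hijacked: bool = False
    device_forged: bool = False
    screen_sharing: bool = False
    liveness_score: float = 1.0      # 0.0 = likely spoof, 1.0 = likely live person
    authenticity_score: float = 1.0  # 0.0 = likely synthetic, 1.0 = likely genuine image


def assess_face_session(signals: FaceSessionSignals,
                        min_liveness: float = 0.8,
                        min_authenticity: float = 0.7) -> tuple[str, list[str]]:
    """Return ("allow" or "block", reasons) for one face-verification attempt."""
    reasons: list[str] = []
    # Stage 1: environment and device risks observed in real time.
    if signals.camera_hijacked:
        reasons.append("camera_hijacked")
    if signals.device_forged:
        reasons.append("device_forged")
    if signals.screen_sharing:
        reasons.append("screen_sharing")
    # Stage 2: checks on the face itself (liveness and image authenticity).
    if signals.liveness_score < min_liveness:
        reasons.append("low_liveness")
    if signals.authenticity_score < min_authenticity:
        reasons.append("possible_deepfake")
    # Stage 3: block automatically if any check failed.
    return ("block" if reasons else "allow"), reasons


if __name__ == "__main__":
    print(assess_face_session(FaceSessionSignals()))
    print(assess_face_session(FaceSessionSignals(screen_sharing=True,
                                                 authenticity_score=0.4)))
```

Collecting every failed check as a reason, instead of stopping at the first, keeps each decision explainable; a production system might instead weight the signals or pass them to a risk engine rather than rely on fixed thresholds.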

Establish a multi-channel, full-scenario, multi-stage security protection system.

Such a system covers a wide range of channels, platforms, and business scenarios, and provides security services such as threat perception, security protection, data accumulation, model building, and strategy sharing. It can adapt to different business scenarios, offer industry-specific strategies, and accumulate and iterate on data according to each business's own characteristics, enabling precise prevention and control on the platform. This allows it to address Deepfake attacks effectively and provide personalized protection. Dingxiang's latest upgraded anti-fraud technologies and security products build a multi-channel, full-scenario, multi-stage security system that helps enterprises systematically combat new AI-driven threats. These include iOS hardening with adaptive obfuscation, seamless verification capable of generating an unlimited number of images, device fingerprinting that unifies device information across platforms, and the Dinsight risk control engine, which can intercept complex attacks and uncover potential threats.

Regulatory bodies should strengthen penalties for the production and dissemination of Deepfake pornography.

Imposing legal consequences on individuals who create or disseminate Deepfake-manipulated content can help curb the spread of harmful content and hold perpetrators accountable for their actions. Classifying Deepfake fraud as a criminal offense can act as a deterrent against misuse of the technology for fraudulent or other malicious purposes. This is an effective way to mitigate the harm caused by Deepfake technology, and those who intentionally create or spread harmful Deepfakes should face criminal penalties.