Ms. Li, a resident of Ordos City, Inner Mongolia, recently fell victim to a new type of telecommunications fraud. Fraudsters used DEEPFAKE technology to synthesize a video call impersonating her old classmate, which proved crucial in winning Ms. Li's trust.

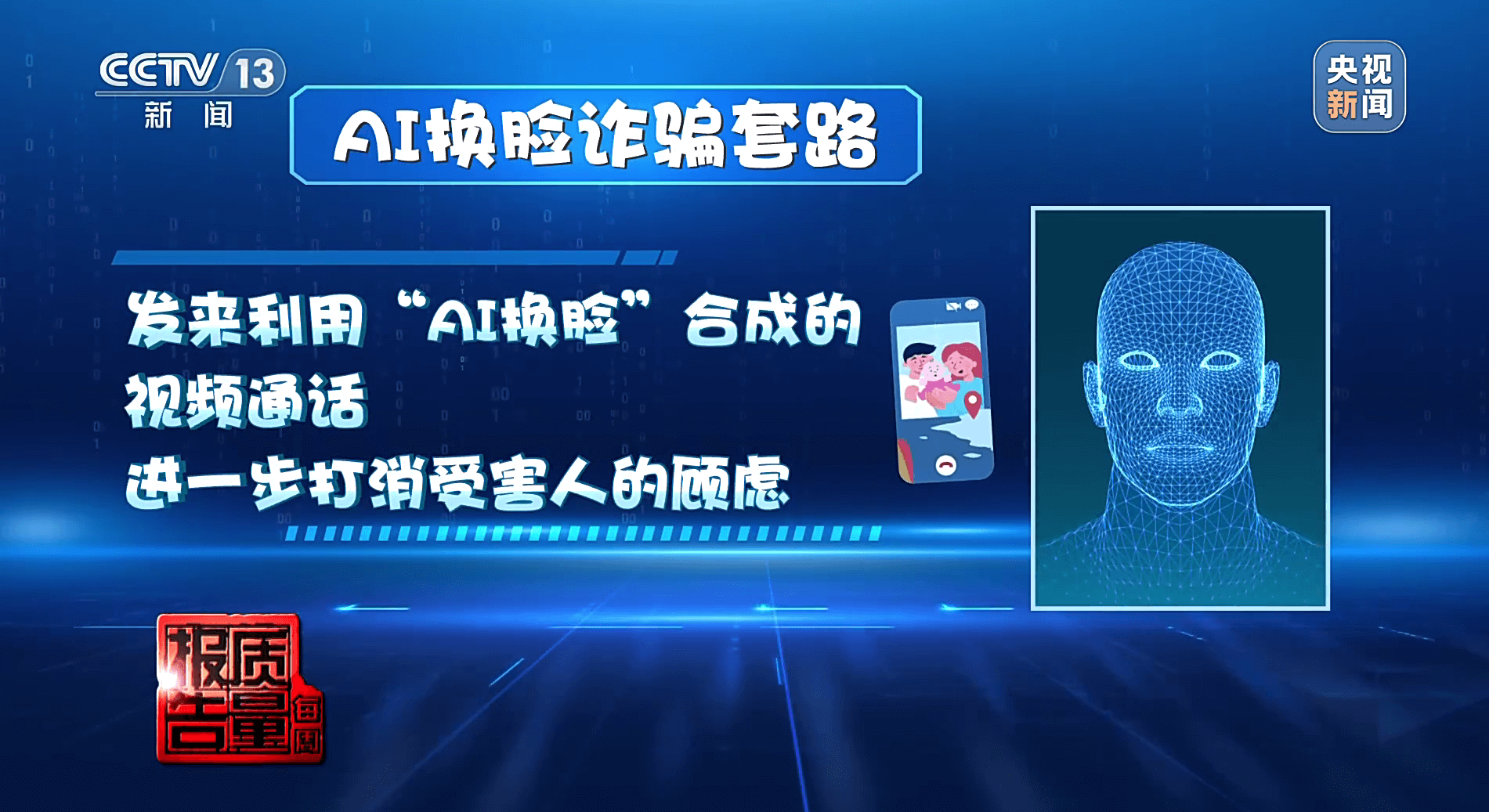

The impostor posed as Ms. Li's old classmate "Jia," made contact through WeChat and QQ, and used DEEPFAKE technology to forge a video call that won Ms. Li's trust.

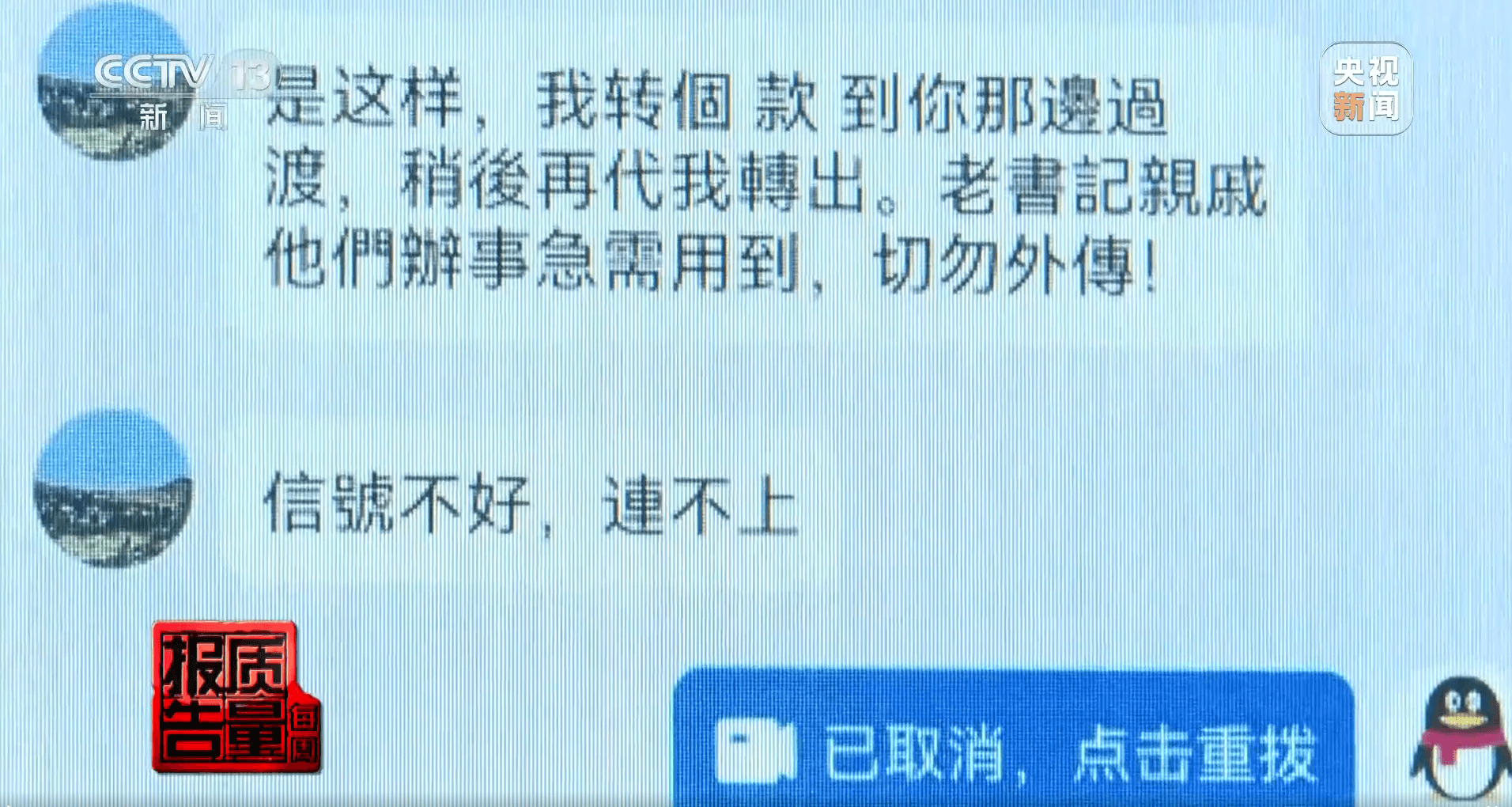

Ms. Li received a WeChat friend request from an account whose nickname and profile picture matched those of her old classmate "Jia." After a brief QQ video call that appeared to confirm the caller's identity, the fraudsters claimed they urgently needed money and asked Ms. Li to transfer funds, sending her a fake bank transfer screenshot. Without verifying the receipt, Ms. Li transferred 400,000 RMB into the fraudsters' account. When they demanded further transfers, Ms. Li realized she might have been deceived, so she immediately contacted her real classmate and reported the incident to the police.

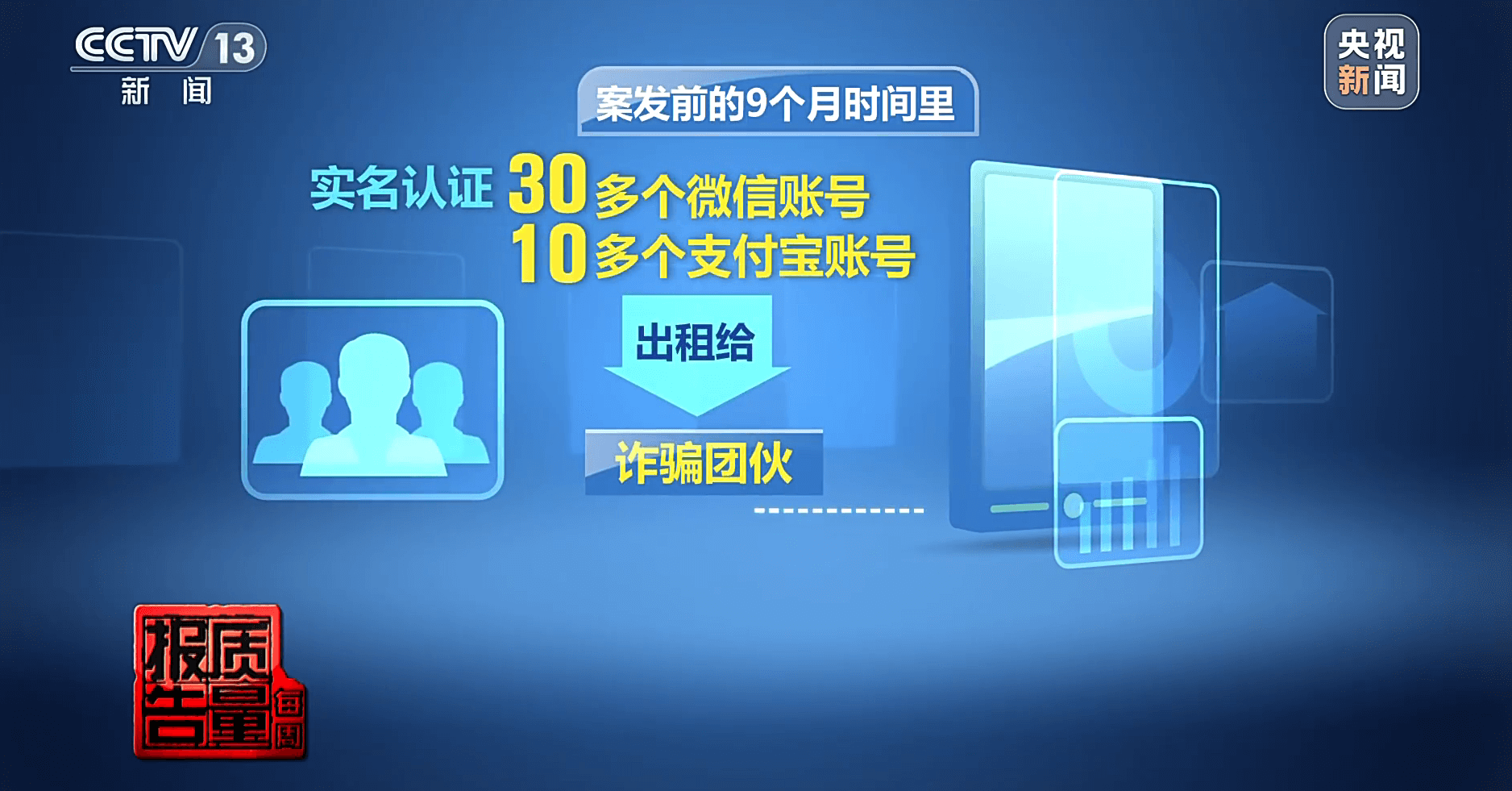

The Ordos police swiftly activated emergency measures to freeze the transaction and successfully returned the 400,000 RMB to Ms. Li. The investigation found that the WeChat account in question was linked to a person named Liu, who had registered 12 WeChat accounts under his name and was engaged in suspicious activity. Liu eventually admitted to renting out his WeChat account to the fraudsters after seeing an offer in a QQ part-time job group about "earning money by renting out verified social media accounts."

Mr. Li from Hebei encountered another DEEPFAKE-based telecommunications fraud. Fraudsters on a dating app induced him to engage in explicit conversations and then to download illegal chat software. They used AI technology to fabricate a pornographic video and extorted 120,000 RMB from him.

Frauds Utilizing DEEPFAKE Technology

These incidents underscore the application of DEEPFAKE technology in fraudulent activities and the murky gray industry behind it.

First, fraudsters target individuals on dating platforms, using explicit conversations to lure victims into downloading illegal chat software that harvests their contact lists, photo libraries, and other private information.

Second, they use software to capture the victim's facial features and combine them with AI synthesis techniques to create fabricated video content.

Finally, fraudsters use custom apps to distribute these videos, carrying out extortion and fraud.

This new form of telecommunications fraud has a complete industrial chain: upstream, citizens' private information is illegally obtained; midstream, custom scripts are used to carry out the fraud; downstream, the proceeds are laundered and cashed out. Citizens' personal information is priced and sold on the dark web, with illegal links, QR codes, and apps serving as the primary means of harvesting it.

Technical Measures to Prevent New Telecommunications Fraud Using DEEPFAKE

To guard against DEEPFAKE-based telecommunications fraud, individuals should treat suspicious online contact with caution and verify it offline, prolong the conversation, and probe the other party, for example by requesting specific actions that may expose flaws in the forgery. Enterprises are advised to combine multiple technologies and methods. More fundamentally, the solution lies in promoting positive applications of AI technology and cracking down hard on criminal activity.

1. Identification of DEEPFAKE fraudulent videos:

During a video chat, ask the other party to press their nose or face and watch how the face changes. A real person's nose will deform when pressed. Alternatively, ask them to eat or drink and observe their face. Requesting unusual actions or expressions, such as waving or performing difficult gestures, can also help distinguish a real caller from a forged one. In a forged video, these actions can interfere with the facial data, producing slight tremors, flickers, or other anomalies.

2. Comparison and identification of device information, geolocation, and behavioral operations can detect and prevent abnormal activities.

Dingxiang Device Fingerprinting identifies legitimate users and potential fraudulent behaviors by recording and comparing device fingerprints. This technology uniquely identifies each device, distinguishing manipulated devices like virtual machines, proxy servers, and emulators. It analyzes behaviors such as multiple account logins, frequent IP address changes, and device attribute changes that deviate from normal user habits, aiding in tracking and identifying fraudsters' activities.
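As a rough illustration of the kind of behavioral comparison described above, the following minimal sketch flags devices that log in to unusually many accounts or appear from unusually many IP addresses. It is not Dingxiang's implementation: `LoginEvent`, `flag_suspicious_devices`, and both thresholds are hypothetical names and values chosen for the example, and the device identifier is assumed to come from some fingerprinting step that is not shown.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    device_id: str  # assumed output of a fingerprinting step (not shown)
    account: str
    ip: str


def flag_suspicious_devices(events, max_accounts=3, max_ips=5):
    """Return {device_id: [reasons]} for devices exceeding either threshold.

    The thresholds are illustrative defaults, not recommended values.
    """
    accounts = defaultdict(set)
    ips = defaultdict(set)
    for event in events:
        accounts[event.device_id].add(event.account)
        ips[event.device_id].add(event.ip)

    flagged = {}
    for device in accounts:
        reasons = []
        if len(accounts[device]) > max_accounts:
            reasons.append("many_accounts")
        if len(ips[device]) > max_ips:
            reasons.append("many_ips")
        if reasons:
            flagged[device] = reasons
    return flagged
```

A production system would presumably also weigh time windows and device attribute changes; this sketch only counts distinct accounts and IP addresses per device.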

3. Enhanced verification for actions such as remote login from different locations, device changes, phone number changes, and sudden activity spikes is crucial.

Continuous identity verification during sessions is vital to ensure consistency throughout user interactions. Dingxiang atbCAPTCHA accurately distinguishes between human operators and machines, precisely identifies fraudulent behaviors, and monitors and intercepts abnormal activities in real time.
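The idea of stepping up verification when these risk signals appear can be sketched as follows. This is only an assumed decision rule for illustration: `SessionContext`, `required_verification`, the spike threshold, and the three verification levels are hypothetical and do not describe how atbCAPTCHA or any Dingxiang product works.

```python
from dataclasses import dataclass


@dataclass
class SessionContext:
    new_device: bool
    new_location: bool
    phone_changed_recently: bool
    actions_last_minute: int


def required_verification(ctx, spike_threshold=30):
    """Map the number of active risk signals to a verification level.

    Hypothetical levels: "none", "captcha" (one signal),
    "strong" (two or more signals, e.g. a liveness check).
    """
    signals = sum([
        ctx.new_device,
        ctx.new_location,
        ctx.phone_changed_recently,
        ctx.actions_last_minute > spike_threshold,
    ])
    if signals == 0:
        return "none"
    if signals == 1:
        return "captcha"
    return "strong"
```

Re-evaluating such a rule on each sensitive action, rather than only at login, is one simple way to approximate continuous verification during a session.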

4. AI technology and human verification systems for facial anti-fraud are employed to prevent DEEPFAKE fake videos and images.

Dingxiang's comprehensive facial security threat perception solution employs multi-dimensional verification based on device environment, facial information, image authentication, user behavior, and interaction status. It swiftly identifies various malicious activities such as injection attacks, live forgery, image forgery, camera hijacking, debugging risks, memory tampering, Root/jailbreak, malicious ROMs, and emulators, automatically blocking operations upon detecting fabricated videos, fake facial images, or anomalous interaction behaviors. The system can dynamically adjust video verification strength and user-friendliness, implementing a mechanism for enhanced verification of abnormal users while employing atbCAPTCHA for regular users.

5. Unearthing potential fraud threats and thwarting complex DEEPFAKE attacks is achieved through Dingxiang Dinsight.

Dinsight aids enterprises in risk assessment, anti-fraud analysis, and real-time monitoring to enhance risk control efficiency and accuracy. Dinsight processes daily risk control strategies in less than 100 milliseconds, supports configurable access and deposition of multi-party data, and optimizes security policies automatically based on known risks using risk logs and data mining. The Xintell intelligent model platform, in conjunction with Dinsight, enables automatic optimization of security strategies for various scenarios based on mature indicators, strategies, models, and deep learning technology. It standardizes complex data processing, mining, and machine learning workflows, from data handling and feature derivation to model construction and deployment.