With AI technology now in widespread use, a sufficient set of voice samples from a person is enough to "clone" their voice and build AI voice products. This advance also creates new challenges for protecting voice rights.

China's first AI voice infringement case, decided by the Beijing Internet Court, has drawn wide attention to the limits on how AI technology may be applied. The case involves Ms. Yin, whose voice was cloned and commercialized without her authorization.

A Legal Case of Voice Cloning

Ms. Yin is a professional voice actress who discovered that her voice was being used for AI voiceovers on a short-video platform without her authorization. Her investigation showed that recordings of her voice, made while she was working with a cultural media company, had been provided to a software company without her consent and were ultimately purchased and commercialized by a tech company.

The tech company used AI technology to clone Ms. Yin's voice for a text-to-speech product called "Mo Xiaoxuan," which it sold on its software platform. Ms. Yin considered this an infringement of her voice rights and sued the companies involved, demanding that they stop the infringement, apologize, and compensate her a total of 600,000 yuan for economic losses and emotional distress.

The case highlights the conflict between technology and law and prompts deeper reflection on the ethics of applying AI. Voice cloning has brought convenience to areas such as speech synthesis and personalized services, but it must be applied cautiously, within a legal framework that respects and protects individuals' personality rights.

A key issue in the case was authorization. The defendants argued that the AI-generated voice was not identifiable and therefore should not be protected under voice rights. The plaintiff contended that even if the defendants held copyright authorization for the recordings, that did not amount to authorization to use her personality rights. The court ruled that if the public can associate a voice with a specific individual through its timbre, tone, and speaking style, the voice is identifiable and should be protected. It ultimately found that the cultural media company and the software company had used the plaintiff's voice without permission, constituting infringement.

Under Article 1023 of the Civil Code of the People's Republic of China, the protection of a natural person's voice is governed by reference to the relevant provisions on portrait rights. Voice rights are a personality right, and the prerequisite for their protection is "identifiability."

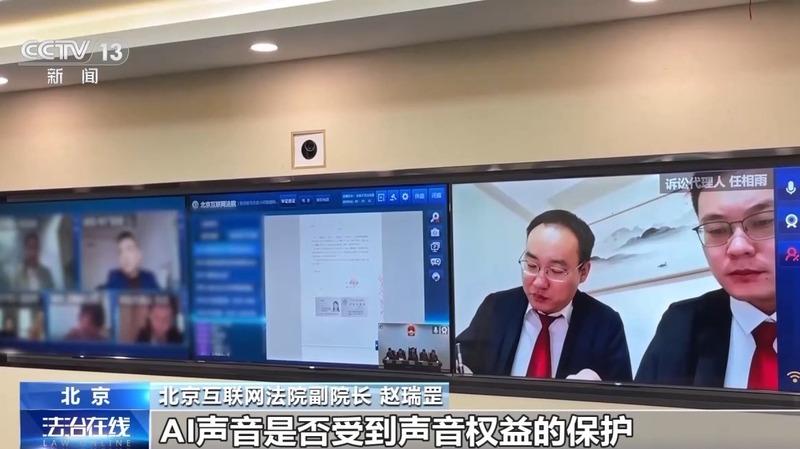

Zhao Ruigang, Vice President of the Beijing Internet Court, noted that a voice clearly functions as an identifying mark that can be linked to a specific person, which is why the Civil Code adds voice rights as a new type of identity-marking personality right. Zhu Wei, Deputy Director of the Communication Law Research Center at China University of Political Science and Law, pointed out that voice rights are protected by reference to portrait rights, and that the right by which a specific person is recognized through their voice is a specific personality right. Experts agree that whether the rights holder's lawful authorization was obtained is the key question in determining voice rights infringement.

On April 23, 2024, the Beijing Internet Court ruled that the defendant tech company and the software company must apologize to the plaintiff, and that the cultural media company and the software company must pay her 250,000 yuan in compensation for economic losses. The judgment underscores the importance of protecting voice rights and sets out legal requirements for the commercial use of voice cloning technology.

Where Are Personal Voices Stolen From?

Voice cloning technology has brought revolutionary changes to fields such as speech synthesis and personalized services. However, technology cuts both ways, and scams based on voice cloning are on the rise. Criminals are exploiting voice cloning for fraud in a growing variety of ways.

With AI, cloning anyone's voice is easy. It only requires extracting voice samples from public channels, sometimes just a few seconds of audio, to create a highly realistic clone. The resulting clone can mimic not only a person's tone and intonation but also their verbal habits and manner of expression, making it hard for listeners to doubt its authenticity.

Before carrying out this new type of telecom and online fraud with voice cloning, fraudsters first collect information about their targets, including images, contact details, home addresses, work information, and lifestyle details. The Dingxiang Defense Cloud Business Security Intelligence Center identifies several channels through which such information can leak:

- Social Media: Social media has become an indispensable part of daily life, and the sensitive information people overshare there has turned these platforms into a repository of scam material for fraudsters.

- Network Data Breaches: Large-scale data breaches can expose personal information, including photos, videos, and voice recordings. Fraudsters can buy this leaked data on dark web platforms and use it for fraud.

- Phishing and Malware: Fraudsters send phishing emails or use malware to obtain victims' personal information, including photos and videos. Once they gain access to a victim's device, they can harvest even more personal data. For instance, on February 15, 2024, the security company Group-IB announced the discovery of a malware family named "GoldPickaxe." Its iOS version tricked users into performing facial recognition scans and submitting identity documents, then used the captured facial data to carry out "AI face-swapping" fraud.

- Public Events and On-Site Activities: At public venues or events such as conferences, exhibitions, and social gatherings, fraudsters may collect victims' photos, voice recordings, and videos.

Countering voice cloning requires technical experts and companies to adopt multi-layered defenses. On one hand, they need to detect and identify fake voices effectively, and platforms need to strengthen their identification of cloned voices. On the other hand, they need to prevent the use and spread of cloned voices by verifying users' behavior and identity, reducing attackers' opportunities for fraud.

Individuals, for their part, should avoid sharing personal photos, voice recordings, and videos on social media, and limit the exposure of their accounts, family, work, and other private information to lower the risk of identity forgery. Anyone who discovers voice-cloned content should report it immediately to the social media platform's administrators and to law enforcement so that it can be removed and its source traced.