Recently, Mr. Ma from Guyuan City, Ningxia, reported to local police that he had been a victim of telecom fraud, losing 15,000 yuan to a scammer pretending to be his cousin. This scam not only involved AI face-swapping technology but also exploited the victim's trust in his family members.

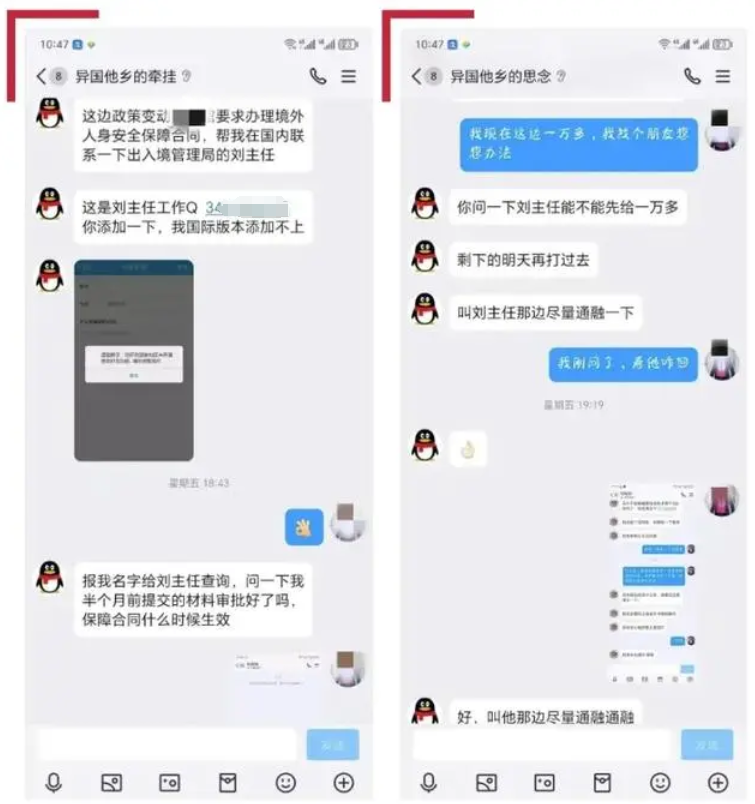

The incident began when Mr. Ma, browsing videos on a platform in his free time, suddenly received a message from his "cousin." The sender claimed to be working in Africa, where an unstable network made it hard to stay in touch with family. He asked to be added as a friend because he needed help with an urgent matter. Trusting his relative, Mr. Ma accepted the friend request without hesitation.

Once they had added each other, the "cousin" started a video call. On screen, he looked like Mr. Ma's cousin and spoke in a familiar way. He even mentioned some family matters, which further dispelled Mr. Ma's doubts. During the chat, the "cousin" said he urgently needed to sign an important contract for his company, but the unstable network in Africa prevented him from making transfers. He asked Mr. Ma to cover the contract payment temporarily and promised to repay him once the network stabilized.

Reassured by the apparent "verification" of the video call, Mr. Ma believed he really was talking to his cousin and quickly agreed to help. Following the "cousin's" instructions, he made three transfers to a designated bank account, totaling 15,000 yuan.

A few days later, Mr. Ma contacted his cousin through WeChat to ask for repayment and learned that something was wrong. His real cousin said he had never borrowed money from Mr. Ma and was not working in Africa. Realizing he had been scammed, Mr. Ma immediately reported the case to the police.

The police's initial investigation revealed that this was a typical case of modern telecom fraud. The scammer had used advanced AI face-swapping technology to forge the image of the "cousin" in the video to deceive Mr. Ma. Additionally, the scammer utilized voice-cloning technology to imitate the "cousin's" voice and accent, making the scam even more convincing.

How to Protect Yourself

In such scams, fraudsters first collect voice recordings, speech samples, or facial images of the person they intend to impersonate. They then use AI to generate fake audio, video, or images that are almost indistinguishable from the real thing. Because the fake content looks and sounds authentic, victims trust it readily, which makes the scam highly deceptive. Finally, the scammers use pretexts such as loans, investments, or emergencies to persuade the impersonated person's friends or relatives to transfer money or reveal sensitive information such as bank account passwords.

This case is a reminder to the public to stay cautious when family or friends make sudden requests for money, even if a video call seems to "verify" who they are. Whenever money is involved, confirm the person's identity through more than one channel. For example, call them directly or check with other family members to avoid falling into a trap.

1. Spotting fakes during a call:

During a video chat, ask the other person to press on their nose or face and watch what happens. A real nose deforms when pressed. You can also ask them to eat or drink something while you observe their facial movements. Another option is to request unusual gestures or expressions, such as waving or making a difficult hand sign. While the person waves, the facial data may be disrupted, causing shaking, flickering, or other anomalies.

2. Hanging up and calling back:

If you receive a suspicious video or call, stay calm and hang up on the pretext of a bad signal. Then immediately call the person back through a different channel to verify their identity, rather than responding to the request on the spot.

3. Asking challenge questions:

Set a "safety word" or "challenge question" that only your friends, family, or colleagues would know. Use it to verify the caller's identity whenever you receive a suspicious communication. If the person cannot give the correct safety word or dodges the question, hang up immediately and confirm their identity through a contact method you know is safe.

How Platforms Can Prevent Fraud

Enterprises are advised to combine multiple technologies and methods. Beyond that, the fundamental solution is to promote beneficial uses of AI while cracking down hard on criminal activity.

1. Identifying suspicious devices:

Dingxiang Device Fingerprinting records and compares device fingerprints to distinguish legitimate users from potential fraud. It uniquely identifies each device and detects maliciously controlled environments such as virtual machines, proxy servers, and emulators. It also analyzes abnormal behavior that deviates from normal user habits, including multiple-account logins, frequent IP address changes, and modified device attributes. This helps to track and identify fraudsters.
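The fingerprint-and-compare idea above can be illustrated with a generic sketch. This is not how Dingxiang Device Fingerprinting works internally. The attribute names, the client-reported `is_emulator`/`is_vm` flags, the `DeviceTracker` class, and the thresholds are all hypothetical and chosen only for illustration. The sketch hashes a fixed set of attributes into a device ID. It then flags emulators or virtual machines, too many accounts on one device, and too many distinct IPs within a sliding window.

```python
import hashlib
import json
from collections import defaultdict

# Hypothetical thresholds, chosen only for illustration.
MAX_ACCOUNTS_PER_DEVICE = 3
MAX_IPS_PER_WINDOW = 5
WINDOW_SECONDS = 3600

# Hypothetical attribute names; real SDKs collect many more signals.
FINGERPRINT_FIELDS = ("os", "model", "screen", "timezone", "gpu")


def fingerprint(attrs: dict) -> str:
    """Hash a canonical JSON encoding of selected device attributes."""
    stable = {k: attrs.get(k) for k in FINGERPRINT_FIELDS}
    canonical = json.dumps(stable, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class DeviceTracker:
    """Remembers which accounts and IPs have been seen on each device ID."""

    def __init__(self) -> None:
        self.accounts = defaultdict(set)    # device ID -> account IDs
        self.ip_events = defaultdict(list)  # device ID -> [(timestamp, ip)]

    def observe(self, attrs: dict, account: str, ip: str, ts: float) -> list[str]:
        """Record one login and return the list of triggered flags."""
        dev = fingerprint(attrs)
        flags = []
        # Hypothetical client-reported environment flags.
        if attrs.get("is_emulator") or attrs.get("is_vm"):
            flags.append("emulator_or_vm")
        self.accounts[dev].add(account)
        if len(self.accounts[dev]) > MAX_ACCOUNTS_PER_DEVICE:
            flags.append("multi_account")
        # Keep only IP events that fall inside the sliding time window.
        events = [(t, a) for t, a in self.ip_events[dev] if ts - t < WINDOW_SECONDS]
        events.append((ts, ip))
        self.ip_events[dev] = events
        if len({a for _, a in events}) > MAX_IPS_PER_WINDOW:
            flags.append("frequent_ip_change")
        return flags


if __name__ == "__main__":
    tracker = DeviceTracker()
    phone = {"os": "Android 13", "model": "X1", "screen": "1080x2400",
             "timezone": "Asia/Shanghai", "gpu": "Adreno"}
    for i in range(4):
        flags = tracker.observe(phone, f"user{i}", "10.0.0.1", ts=float(i))
    print(flags)  # ['multi_account']
```

A plain hash makes identical attribute sets map to the same ID. However, it cannot by itself detect attribute tampering, because any change simply yields a new ID. Detecting modified attributes would need fuzzier matching or server-side history, which this sketch leaves out.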

2. Detecting suspicious account activity:

Additional verification should be required in scenarios such as logins from unusual locations, device changes, phone number changes, and sudden activity on dormant accounts. Continuous identity verification during a session is also critical to ensure the user's identity stays consistent throughout. Dingxiang's atbCAPTCHA quickly and accurately determines whether the operator is a human or a machine. It identifies fraudulent activity and monitors and blocks abnormal behavior in real time.
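The triggers listed above map naturally onto simple rules. The sketch below shows one hypothetical way a platform might decide when to demand extra verification. It is not part of atbCAPTCHA or any Dingxiang product. The `LoginContext` fields, the function names, and the 90-day dormancy threshold are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical threshold: an account idle longer than this counts as dormant.
DORMANCY = timedelta(days=90)


@dataclass
class LoginContext:
    """Hypothetical snapshot of what the platform knows about one login."""
    region: str
    usual_region: str
    device_id: str
    known_devices: frozenset
    phone_changed_recently: bool
    last_active: datetime
    now: datetime


def step_up_reasons(ctx: LoginContext) -> list[str]:
    """Return the reasons (possibly none) to require additional verification."""
    reasons = []
    if ctx.region != ctx.usual_region:
        reasons.append("remote_login")
    if ctx.device_id not in ctx.known_devices:
        reasons.append("new_device")
    if ctx.phone_changed_recently:
        reasons.append("phone_number_changed")
    if ctx.now - ctx.last_active > DORMANCY:
        reasons.append("dormant_account_reactivated")
    return reasons


def requires_step_up(ctx: LoginContext) -> bool:
    """In this sketch, any single trigger is enough to require re-verification."""
    return bool(step_up_reasons(ctx))
```

The text also calls for continuous verification during a session. One way to approximate it is to call `step_up_reasons` again before sensitive actions such as transfers, not only at login.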

3. Preventing fake face-swapped videos:

Dingxiang's full-chain panoramic facial security threat detection solution performs intelligent verification across multiple dimensions. These include the device environment, facial information, image forgery detection, user behavior, and interaction state. It rapidly identifies more than 30 types of malicious activity, including injection attacks, liveness spoofing, image forgery, camera hijacking, debugging risks, memory tampering, rooting/jailbreaking, malicious ROMs, and emulators. When it detects fake videos, forged facial images, or abnormal interactions, it can automatically block the operation. Verification intensity and user-friendliness can also be configured flexibly. Verification is then dynamically strengthened for abnormal users, while regular users continue with atbCAPTCHA.

4. Uncovering potential fraud threats:

Dingxiang's Dinsight real-time risk control engine helps enterprises with risk assessment, anti-fraud analysis, and real-time monitoring, improving the efficiency and accuracy of risk control. Dinsight's average processing time is under 100 milliseconds, and it supports configuring and accumulating data from multiple sources. It draws on mature indicators, strategies, and modeling expertise together with deep learning. This lets its risk control mechanisms monitor their own performance and iterate on their own. Paired with Dingxiang's Xintell intelligent model platform, the solution automatically optimizes security strategies for known risks and mines potential risks from risk control logs and data. It also supports one-click configuration of risk control strategies for different scenarios. Using deep learning, it standardizes complex processes such as data processing, feature derivation, and model building. The result is a one-stop modeling service that runs from data processing through final model deployment.
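A real-time risk engine of this kind typically evaluates a set of configurable rules against each incoming event and turns the result into a decision. The following is a generic, hypothetical sketch of that pattern, not Dinsight's API or scoring model. The event fields, rule names, weights, and the 40/70 score cut-offs are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

Event = dict  # e.g. {"amount": 15000, "new_payee": True, ...}


@dataclass(frozen=True)
class Rule:
    name: str
    weight: int
    predicate: Callable[[Event], bool]


# Hypothetical rules; a configurable engine would load these rather than hard-code them.
RULES = [
    Rule("large_transfer", 40, lambda e: e.get("amount", 0) >= 10_000),
    Rule("new_payee", 30, lambda e: e.get("new_payee", False)),
    Rule("new_device", 20, lambda e: e.get("new_device", False)),
    Rule("repeated_transfers", 20, lambda e: e.get("transfers_last_hour", 0) >= 3),
]


def evaluate(event: Event, rules=RULES) -> tuple[str, int, list[str]]:
    """Score an event against every rule and map the total score to a decision."""
    hits = [r for r in rules if r.predicate(event)]
    score = sum(r.weight for r in hits)
    if score >= 70:
        decision = "block"
    elif score >= 40:
        decision = "review"
    else:
        decision = "allow"
    return decision, score, [r.name for r in hits]


if __name__ == "__main__":
    event = {"amount": 5000, "new_payee": True, "transfers_last_hour": 3}
    print(evaluate(event))  # ('review', 50, ['new_payee', 'repeated_transfers'])
```

Keeping rules as data rather than branching logic is what makes it possible to add, reweight, or retire rules per scenario without changing the evaluation code. This sketch only hints at that flexibility.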