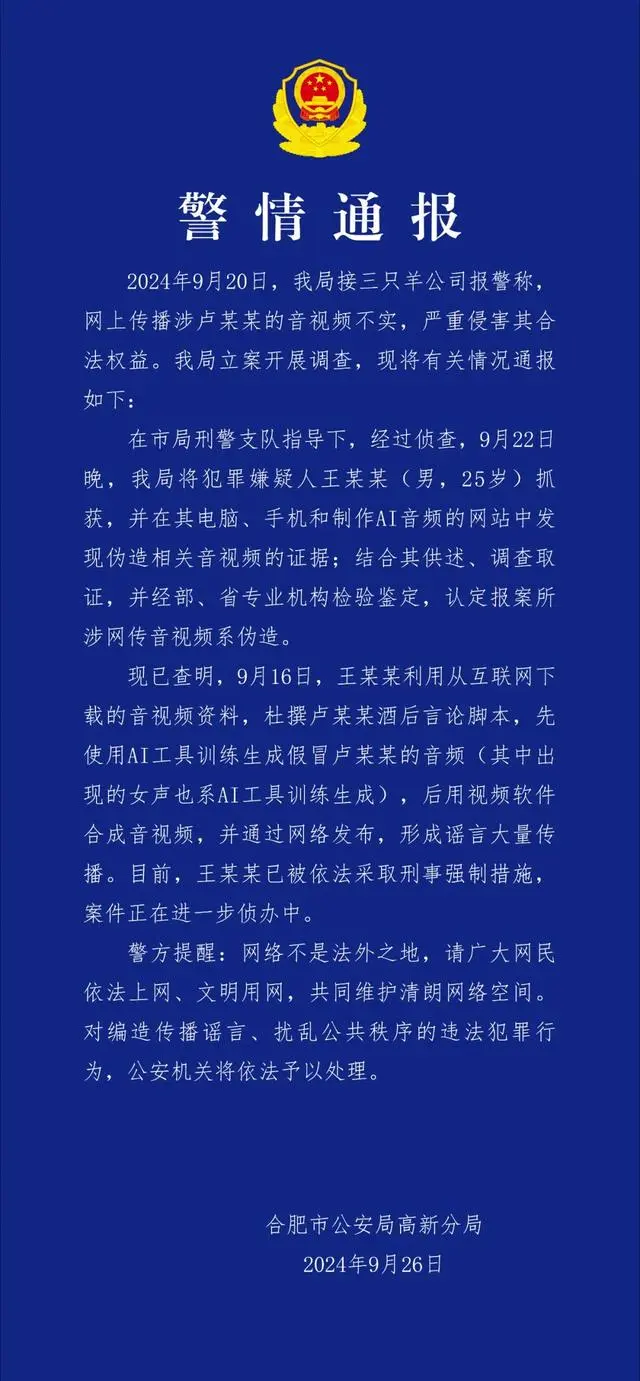

On September 26, the Gaoxin Branch of the Hefei Public Security Bureau released a police briefing stating that the "Three Sheep" company had reported that audio and video of Lu Moumou circulating online were fake and seriously infringed on its legitimate rights.

Investigations revealed that on the evening of September 22, the suspect Wang Moumou used audio and video materials downloaded from the internet to fabricate a script of Lu Moumou’s alleged drunken remarks. Wang then trained AI tools to generate fake audio mimicking Lu Moumou. The female voice heard in the recordings was also generated using AI tools. Subsequently, Wang used video software to synthesize the audio and video, publishing them online, leading to widespread rumors.

Wang Moumou has been subjected to criminal compulsory measures, and the case is under further investigation.

Where Was Lu Moumou’s Voice Stolen From?

AI technology has made it remarkably easy to clone a voice. Attackers extract voice samples from public sources, and a sample of only a few seconds can be enough to produce a highly convincing clone. These clones can mimic not only a person’s tone and inflection but also their speech habits and mannerisms, making it extremely difficult for listeners to tell that the audio is not authentic.

Dingxiang’s Cloud Business Security Intelligence Center identifies several channels through which such material can leak:

- Social Media: Social media has become an integral part of people’s daily lives. Over-sharing sensitive information on these platforms has turned them into a source of raw material for criminals planning fraud.

- Data Breaches: Large-scale data breaches may expose personal information, including photos, videos, and audio. Criminals can acquire this leaked data from dark web platforms to carry out fraudulent activities.

- Phishing and Malware: Criminals often use phishing emails or malware to extract personal information, including photos and videos. Once they gain access to a victim's device, they can obtain additional personal data.

- Public Events and Live Gatherings: At public venues such as conferences, exhibitions, or social gatherings, criminals may collect photos, voice recordings, and videos of their targets.

How Individuals Can Prevent Voice Cloning

Facing the challenge of AI voice cloning requires a multi-layered defense. On one hand, it’s crucial to detect and identify forged voices, with platforms needing to strengthen their capabilities to recognize voice cloning. On the other hand, preventing the misuse and spread of cloned voices through user behavior and identity verification is essential to reduce fraud by attackers effectively.

- Reduce Sharing of Sensitive Information: Individuals should avoid sharing personal photos, voice recordings, and videos on social media to minimize the risk of identity theft. Limiting the public disclosure of personal accounts, family details, and work-related private information reduces the chance of being targeted. If you come across “deepfake” content, report it immediately to social media administrators and law enforcement so that it can be removed and its source traced.

- Platforms Strengthen Voice Cloning Detection: Social media and communication platforms must improve their ability to detect voice cloning, using AI-based voice authentication to identify and block suspicious content quickly. Platforms should develop and apply AI tools for voice authentication, such as voiceprint recognition, to secure voice communication.

- Identify Abnormal Operations Through Behavior Analysis: Platforms should analyze user behavior patterns and identity information to establish security warning mechanisms. This involves monitoring and restricting suspicious activities such as abnormal logins or high-frequency messaging. By analyzing mouse movement, typing style, and other behavioral patterns, platforms can flag activity that deviates from normal usage. Additional identity and device verification adds a further check, and large models that scan vast amounts of data can surface subtle inconsistencies that humans usually miss, helping uncover abnormal operations by attackers.
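As a rough illustration of the "high-frequency messaging" check mentioned above, the following is a minimal sliding-window sketch. The class name `MessageRateMonitor`, its parameters, and the thresholds are hypothetical and chosen only for this example; they do not describe how any particular platform or Dingxiang product works.

```python
from collections import defaultdict, deque


class MessageRateMonitor:
    """Flags accounts that send more than `max_messages` within `window_seconds`.

    Hypothetical helper for illustration; thresholds are assumptions.
    """

    def __init__(self, max_messages: int = 5, window_seconds: float = 10.0):
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # account id -> recent timestamps

    def record(self, account_id: str, timestamp: float) -> bool:
        """Record one message and return True if the account exceeds the limit."""
        events = self._events[account_id]
        events.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_messages


if __name__ == "__main__":
    monitor = MessageRateMonitor(max_messages=3, window_seconds=10.0)
    for t in [0.0, 1.0, 2.0, 3.0]:
        print(t, monitor.record("account-1", t))
```

A flagged account would not necessarily be blocked outright; in practice a signal like this would more plausibly trigger extra verification.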

Dingxiang Device Fingerprinting generates a unified, unique fingerprint for each device. It builds a multi-dimensional recognition model based on device, environment, and behavior to detect risky devices, such as virtual machines, proxy servers, and emulators, that may be maliciously manipulated. The system tracks and identifies fraudulent activity by analyzing abnormal behaviors, such as frequent IP address changes, multiple account logins, and alterations in device attributes, helping businesses manage risk across channels with a single ID.
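The abnormal behaviors listed above can be pictured as simple per-device counters. The sketch below is not Dingxiang's implementation: the `DeviceActivity` schema, the `observe` and `risk_signals` helpers, and all thresholds are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class DeviceActivity:
    """Observations collected for one device fingerprint (hypothetical schema)."""
    ip_addresses: Set[str] = field(default_factory=set)
    account_ids: Set[str] = field(default_factory=set)
    attribute_hashes: Set[str] = field(default_factory=set)


# Illustrative thresholds only; real values would be tuned on real traffic.
MAX_IPS = 3
MAX_ACCOUNTS = 2
MAX_ATTRIBUTE_VERSIONS = 1


def observe(activity: DeviceActivity, ip: str, account_id: str, attribute_hash: str) -> None:
    """Record one login event seen from this device."""
    activity.ip_addresses.add(ip)
    activity.account_ids.add(account_id)
    activity.attribute_hashes.add(attribute_hash)


def risk_signals(activity: DeviceActivity) -> List[str]:
    """Return the names of the abnormal-behavior signals this device triggers."""
    signals = []
    if len(activity.ip_addresses) > MAX_IPS:
        signals.append("frequent_ip_changes")
    if len(activity.account_ids) > MAX_ACCOUNTS:
        signals.append("multiple_account_logins")
    if len(activity.attribute_hashes) > MAX_ATTRIBUTE_VERSIONS:
        signals.append("device_attributes_changed")
    return signals


if __name__ == "__main__":
    device = DeviceActivity()
    for ip in ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]:
        observe(device, ip, "user-1", "attrs-v1")
    print(risk_signals(device))
```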

Dingxiang atbCAPTCHA is based on AIGC technology and prevents AI brute force cracking, automated attacks, and phishing threats. It effectively blocks unauthorized access, account theft, and malicious operations, ensuring system stability. It integrates 13 verification methods and multiple defense strategies, with 4,380 risk strategies, 112 risk intelligence categories, covering 24 industries, and 118 risk types. Its accuracy rate is as high as 99.9%, quickly converting risk intelligence and reducing response time to under 60 seconds, further enhancing the convenience and efficiency of digital login services.

Dingxiang Dinsight’s real-time risk control engine assists businesses with risk assessment, anti-fraud analysis, and real-time monitoring, improving the efficiency and accuracy of risk management. Dinsight processes routine risk control strategies in under 100 milliseconds and supports the integration and storage of multi-source data. It draws on mature indicators, strategies, modeling experience, and deep learning to monitor and iterate on its own risk control performance. Paired with the Xintell intelligent model platform, it automatically optimizes defenses against known risks and identifies potential risks through risk control logs and data mining. It offers one-click configuration of risk control strategies for various scenarios. By standardizing the complex processes of data processing, feature derivation, model building, and deployment, Xintell provides an end-to-end modeling service.

- AI Voice Tools Should Enforce Anti-Counterfeiting Features: For instance, adding small disturbances, erratic noises, or fixed background rhythms to synthesized speech lets listeners distinguish AI-generated voices from real ones. Additionally, the recording hardware used with AI voice synthesis tools should integrate sensors that detect and measure the speaker's biological signals, such as heartbeat, lung movement, and vocal cord vibration, as well as the movement of the lips, jaw, and tongue. This data can be attached to the audio, giving listeners verifiable information for distinguishing naturally recorded voices from AI-generated forgeries.
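One way to picture "attaching" sensor data to audio so that it can be verified is to bind the readings to a hash of the recording with a keyed tag. The sketch below uses HMAC-SHA256 from the Python standard library and is only an assumption about how such a scheme might look: the function names, the record layout, and the `heartbeat_bpm` field are hypothetical. A real deployment would more likely use public-key signatures so that listeners can verify a recording without holding a shared secret.

```python
import hashlib
import hmac
import json


def attach_provenance(audio: bytes, sensor_data: dict, key: bytes) -> dict:
    """Return a record binding the sensor readings to this exact audio."""
    payload = {
        "audio_sha256": hashlib.sha256(audio).hexdigest(),
        "sensor_data": sensor_data,
    }
    # Serialize deterministically so signer and verifier hash the same bytes.
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, message, hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_provenance(audio: bytes, record: dict, key: bytes) -> bool:
    """Check that the record matches the audio and has not been altered."""
    payload = record["payload"]
    if payload["audio_sha256"] != hashlib.sha256(audio).hexdigest():
        return False
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["tag"])


if __name__ == "__main__":
    key = b"demo-key-for-illustration-only"
    audio = b"\x00\x01\x02\x03"  # stand-in for recorded audio bytes
    record = attach_provenance(audio, {"heartbeat_bpm": 72}, key)
    print(verify_provenance(audio, record, key))
    print(verify_provenance(b"different audio", record, key))
```

Under this scheme, audio generated without the recording hardware would carry no valid record, and changing either the audio or the sensor readings invalidates the tag.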