Fraud cases targeting the elderly are on the rise. In recent incidents, scammers used AI to mimic the voices and faces of relatives, deceiving elderly victims into transferring money under the pretext of emergencies, living expenses, and tuition.

Mr. Zhang, a septuagenarian, has lived alone since his wife passed away years ago. His son works abroad and rarely visits. One day, Mr. Zhang received a call from an unfamiliar number. The voice on the other end sounded exactly like his son’s. “Dad, I’m in trouble overseas and need money to resolve the issue,” the voice said, filled with urgency and tension. Concerned for his son, Mr. Zhang quickly sent his hard-earned savings to “help.” A few days later, during a call with his real son, Mr. Zhang discovered he had been scammed: fraudsters had used AI voice-cloning technology to replicate his son’s voice.

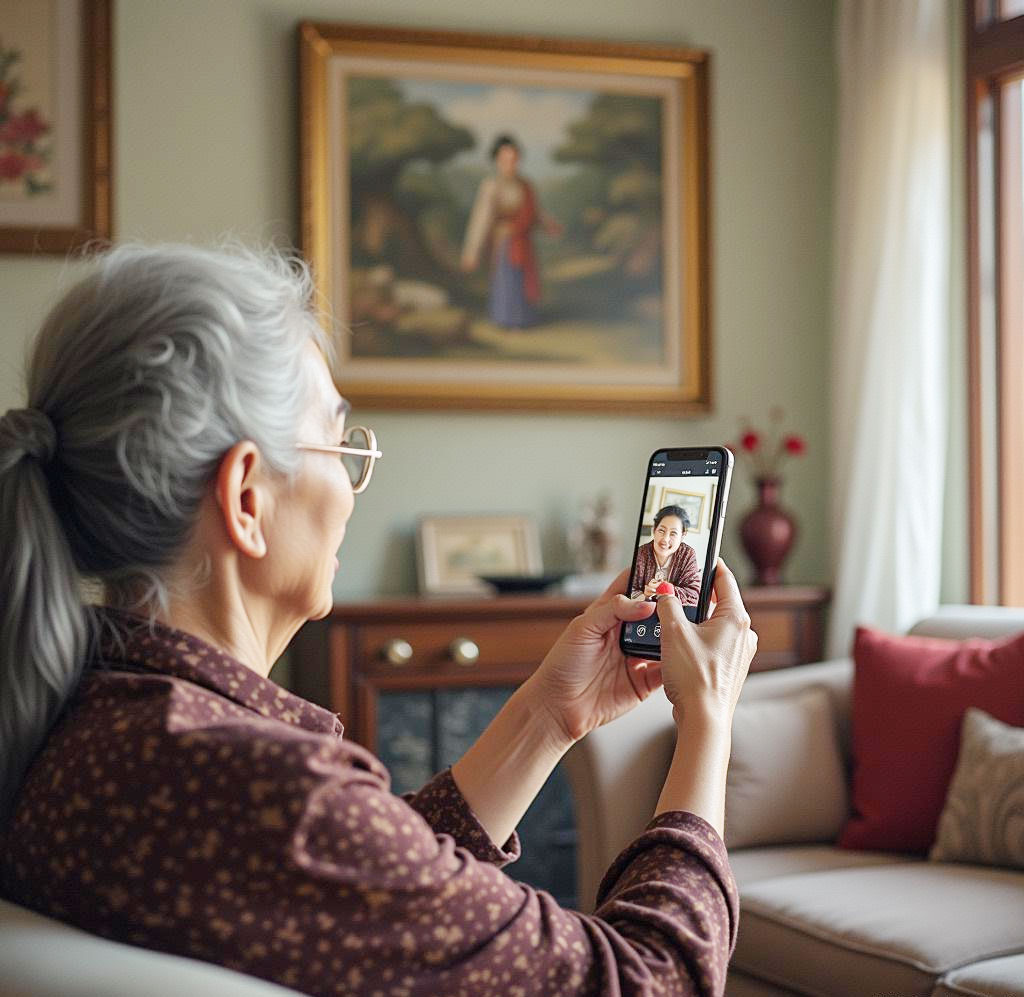

Another heartbreaking incident involved Mrs. Wang, whose daughter is studying abroad. The two often communicate via video calls. One day, Mrs. Wang received a video call request that displayed her daughter’s familiar face. At first, Mrs. Wang suspected nothing, as she was accustomed to such calls. During the conversation, however, the “daughter” claimed to be in urgent need of tuition and living expenses. Driven by maternal concern, Mrs. Wang quickly transferred the money. Later, she discovered that her real daughter had not initiated the call. The “daughter” on the screen was actually a scammer using AI face-swapping technology to superimpose her daughter’s face onto their own.

Unveiling the Fraudsters’ Techniques

- Information Gathering

Scammers begin by collecting basic information about the elderly victim through social media, public records, or leaked databases. This includes names, addresses, contact details, and information about their relatives.

- AI Voice and Face Cloning

Next, they use AI technology to clone voices or generate realistic facial replicas. In video call scams, fraudsters employ AI face-swapping tools to create highly convincing fake visuals, making the "relative" in the video appear flawless and believable.

- Creating Urgent Scenarios

Scammers craft scenarios designed to provoke anxiety, such as claiming to have been in an accident or facing an emergency abroad. These scenarios compel the elderly, who are emotionally attached to their relatives, to act quickly without questioning authenticity.

- Emotional Manipulation

By continuously increasing the sense of urgency and exploiting the elderly victim's emotional dependency on their relatives, fraudsters push victims to make hasty decisions—often transferring money without sufficient verification.

Why Are Elderly People Prone to Fraud?

- Emotional Dependence on Family

Many elderly individuals experience declining physical abilities and shrinking social circles, which intensify feelings of loneliness. This emotional void often creates a strong desire for, and dependence on, family bonds. When scammers exploit these ties, the elderly are more likely to lower their guard and trust the "relative" on the call or video.

- Lack of Awareness About AI Technology

Most elderly people are unfamiliar with advanced AI technologies like voice cloning and face-swapping, which have reached highly convincing levels of realism. Due to limited tech literacy, they struggle to distinguish between genuine and fraudulent interactions, especially when scammers create seamless impersonations of their loved ones.

- Emotion-Driven Decision Making

In urgent scenarios fabricated by scammers, the elderly often let their emotions override rational judgment. Under pressure, they may rush to decisions without thoroughly verifying the information, leading to financial losses.

How to Effectively Prevent Such Scams

In sophisticated telecom frauds that use AI-generated audio and video, anomalies are hard to detect through direct observation. Even when a voice or video call appears to confirm the caller’s identity, the elderly should stay vigilant whenever an urgent financial request is made, and should verify identity through more than one method. Dingxiang Defense Cloud’s Security Intelligence Center recommends the following preventive measures:

- Redialing Calls or Videos

Upon receiving a suspicious call or video, stay calm and avoid responding immediately to any requests. Use an excuse like poor signal to hang up, then reconnect via known, secure contact methods to confirm the requestor’s identity, bypassing the scammer’s manipulation.

- Setting Up Family Verification Mechanisms

Prearrange a “safe word” or “challenge question” known only to you and your family. When suspicious calls arise, ask for the keyword. Failure to answer correctly or evasive behavior can be a warning sign. Hang up immediately and verify the identity through secure channels.

- Consulting Trusted Relatives

For emergency monetary requests, consult with other relatives or trusted contacts to see if they are aware of the situation. This can help alleviate pressure and provide a clearer perspective, reducing the risk of deception.

- Reporting to Authorities

Contact the police by dialing 110 (the police emergency number in mainland China) and report the fraud in detail.

These measures not only help individuals combat AI-driven fraud but also remind everyone to stay calm and vigilant during unexpected financial requests, reducing the likelihood of falling into scammers’ traps.

Platforms Should Strengthen Prevention

Fraudsters combine technology with psychological manipulation to control victims with precision. Combating AI-based fraud takes a collective effort across society, with technical safeguards, education, and emotional support together forming a robust barrier against scams. In particular, short-video platforms must adopt multiple techniques to identify fraudulent accounts and detect manipulated audio and video content at the source.

- Detecting Anomalous Devices

Dingxiang Device Fingerprinting can distinguish legitimate users from potential fraudsters by analyzing unique device identifiers. It detects risky device environments such as virtual machines, proxies, and emulators. By monitoring behaviors like multi-account logins, frequent IP changes, and abnormal device activity, it helps trace and identify fraud.

- Identifying Abnormal Account Behavior

Accounts showing unusual patterns like remote logins, device swaps, or sudden activity after dormancy should undergo frequent verification. Continuous session-based identity checks are critical. Dingxiang atbCAPTCHA quickly differentiates between humans and bots, identifying fraudulent activity in real time and blocking it effectively.

- Preventing Fake Videos Using Face-Swapping

Dingxiang's Full-Link Panorama Face Security Solution employs intelligent verification through device environment analysis, facial recognition, image authenticity checks, user behavior monitoring, and interaction states. It identifies over 30 types of malicious activities, such as live spoofing, image forgeries, camera hijacking, and system modifications. Suspicious videos or interactions can be blocked instantly, with flexible verification configurations balancing user experience and fraud detection.

- Uncovering Potential Fraud Risks

Dingxiang Dinsight supports risk assessment, fraud analysis, and real-time monitoring for enterprises, enhancing risk control efficiency and accuracy. With processing speeds under 100 milliseconds, it integrates multi-source data and applies advanced strategies and models for fraud detection. Paired with the Xintell Intelligent Model Platform, it optimizes security strategies for known risks and uncovers hidden threats, offering standardized data processing, feature extraction, and one-click fraud control for various scenarios.
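To make the platform-side signals described above more concrete, here is a minimal rule-based sketch in Python. It is not Dingxiang's implementation or API. Every name in it (`LoginEvent`, `score_event`, `decide`), along with the weights and thresholds, is a hypothetical chosen for illustration. It only shows how device flags (emulator, virtual machine, proxy), multi-account logins, frequent IP changes, device swaps, and sudden activity after dormancy might be combined into a decision to allow, require extra verification, or block.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class LoginEvent:
    """One login attempt. All field names are hypothetical."""
    account_id: str
    device_id: str
    ip: str
    timestamp: datetime
    is_emulator_or_vm: bool = False        # device-environment flag
    is_proxy: bool = False                 # network flag
    known_device: bool = True              # device seen on this account before
    last_active: Optional[datetime] = None # previous activity on the account


def score_event(event, recent_events,
                max_accounts_per_device=3,
                max_ips_per_account=5,
                dormancy=timedelta(days=90)):
    """Return (score, reasons) using simple additive rules (illustrative weights)."""
    score, reasons = 0, []

    if event.is_emulator_or_vm:
        score += 40
        reasons.append("emulator_or_vm")
    if event.is_proxy:
        score += 20
        reasons.append("proxy")

    # Multi-account logins: distinct accounts seen on the same device.
    accounts = {e.account_id for e in recent_events if e.device_id == event.device_id}
    accounts.add(event.account_id)
    if len(accounts) > max_accounts_per_device:
        score += 30
        reasons.append("multi_account_device")

    # Frequent IP changes: distinct IPs seen for the same account.
    ips = {e.ip for e in recent_events if e.account_id == event.account_id}
    ips.add(event.ip)
    if len(ips) > max_ips_per_account:
        score += 20
        reasons.append("frequent_ip_changes")

    if not event.known_device:
        score += 15
        reasons.append("device_swap")

    # Sudden activity after a long dormant period.
    if event.last_active is not None and event.timestamp - event.last_active > dormancy:
        score += 25
        reasons.append("active_after_dormancy")

    return score, reasons


def decide(score, step_up_at=30, block_at=70):
    """Map a score to an action; thresholds are assumptions, not tuned values."""
    if score >= block_at:
        return "block"
    if score >= step_up_at:
        return "step_up_verification"
    return "allow"


# Usage: a dormant account reappearing on an unfamiliar device.
now = datetime(2024, 1, 1, 12, 0)
event = LoginEvent("acct-1", "dev-9", "203.0.113.7", now,
                   known_device=False, last_active=now - timedelta(days=200))
score, reasons = score_event(event, recent_events=[])
print(decide(score), score, reasons)
```

In this sketch a single weak signal leaves the login untouched, a combination such as a device swap after long dormancy triggers extra verification, and several strong signals together lead to a block. A production system would more likely rely on learned models and far richer data than fixed weights like these.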