According to the "Fifth Survey Report on the Living Conditions of Elderly People in Urban and Rural China," as of 2021:

- 36.6% of elderly individuals can use smartphones.

- 40.4% cannot use smartphones.

- 23.0% do not own a smartphone.

Among those who can use smartphones:

- 74.7% use mobile chatting apps.

- 18.1% use mobile payments.

- 9.6% book medical appointments online.

- 7.1% use ride-hailing apps.
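The app-usage figures above are shares of smartphone users, not of all elderly people. Assuming that reading, multiplying each one by 36.6% converts it to a share of the whole elderly population. The first line checks that the three ownership categories account for everyone.

```latex
\begin{aligned}
36.6\% + 40.4\% + 23.0\% &= 100.0\% \\
0.366 \times 0.747 &\approx 0.273 \quad (\text{chat apps: about } 27.3\% \text{ of all elderly}) \\
0.366 \times 0.181 &\approx 0.066 \quad (\text{mobile payments: about } 6.6\%) \\
0.366 \times 0.096 &\approx 0.035 \quad (\text{online medical appointments: about } 3.5\%) \\
0.366 \times 0.071 &\approx 0.026 \quad (\text{ride-hailing: about } 2.6\%)
\end{aligned}
```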

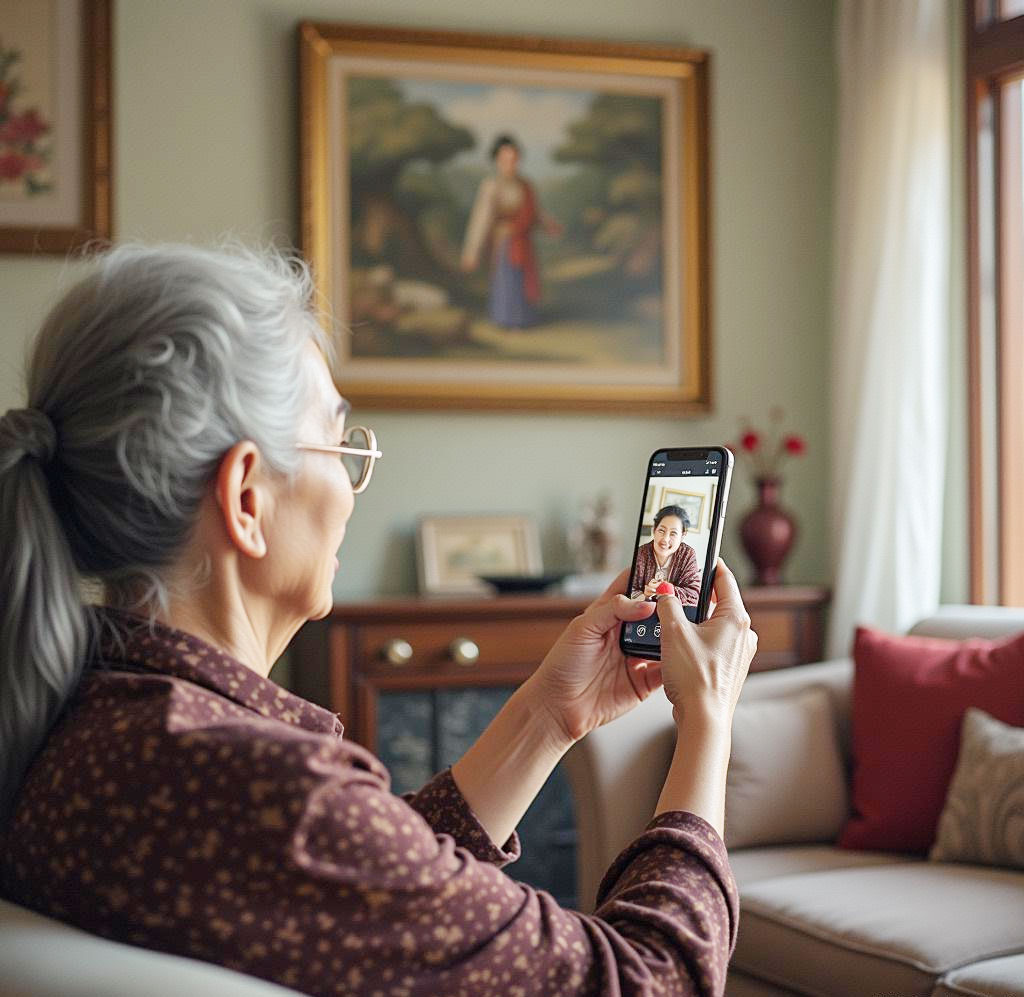

Elderly Frequently Fall Victim to AI Fraud Impersonating Relatives

AI Fake Relative Claiming Car Accident

In March 2024, Hanyang District Police in Wuhan received a report from a bank: two elderly customers had asked to withdraw fixed-term deposits early, raising suspicion of telecom fraud. The two seniors were unrelated and simply happened to be at the same bank, yet each had received a phone call purportedly from a grandson.

The woman was told that her grandson had injured someone in a fight and was at the police station sorting it out. The man was told that his grandson had been in a car accident and needed money immediately to settle the compensation. Both were convinced the voices were exactly those of their grandsons. After checking with family members, the police confirmed that neither grandson was in any such trouble. This new type of fraud uses AI to clone a relative's voice and fabricate a realistic emergency.

AI Fake Relative Asking for Tuition Fees

A septuagenarian, Mr. Zhang, received a call from someone claiming to be his son abroad, saying, “Dad, I’m in trouble overseas and need money urgently.” The voice sounded identical to his son’s, prompting Mr. Zhang to immediately wire his savings. Days later, when his actual son called, it was revealed that the earlier call was a scam using AI voice synthesis.

Another victim, Mrs. Wang, received a video call that appeared to come from her daughter, who is studying abroad. The caller showed her daughter's face and said she urgently needed money for tuition and living expenses. Believing her "daughter's" plea, Mrs. Wang transferred the money, only to discover later that her real daughter had never made the call. The scammer had used AI face-swapping to replicate her daughter's face and stage a fake family crisis.

Why Scammers Succeed

- Information Gathering: Fraudsters collect personal data, including names, addresses, contacts, and family details, from social media, public databases, or leaked records.

- AI Voice and Face Simulation: Fraudsters use AI to clone voices and generate realistic faces for video calls, making the impersonation highly convincing.

- Fabricating Urgent Scenarios: They invent situations designed to provoke anxiety and urgency, such as an accident or other crisis that supposedly requires immediate financial help.

- Exploiting Emotional Vulnerability: Many elderly people, with shrinking social circles and feelings of loneliness, depend heavily on family members emotionally. When a seemingly authentic appeal comes from a relative, especially in an emergency, they lower their guard. Emotional stress impairs judgment and leads them to act hastily without verifying the situation.

Many seniors are also unaware of AI technologies such as face-swapping and voice synthesis. Because they do not know these tools exist, they have no reason to suspect fraud when a scammer seamlessly replicates a familiar voice or face.

How Can Such Scams Be Prevented?

The white paper on financial AIGC audio and video anti-fraud, jointly released by the Bank of Communications and Dingxiang, gives a detailed overview of the characteristics, attack processes, and prevention measures for fraud involving AI-based "face-swapping" and "voice cloning."

In this new type of telecom fraud, the audio and video generated by AI are so realistic that victims can rarely spot anything abnormal by sight or sound. A phone call or video chat is therefore not proof of identity on its own, and elderly people should remain highly vigilant whenever they face an urgent request for money. For any transaction involving money, they should confirm the other party's identity through more than one method rather than make a hasty decision. The Dingxiang Defense Cloud Business Security Intelligence Center recommends the following measures to help the elderly prevent such fraud:

1. Redial Video or Phone Calls

- Action: When receiving a suspicious call or video, stay calm and do not immediately respond to any requests. You can pretend there is a poor signal, hang up, and then call back using another known and secure contact method to confirm whether the request genuinely came from your relative or friend.

- Goal: Avoid acting directly on fraudulent requests.

2. Set Up a "Family Verification Mechanism"

- Action: Agree in advance on a "safety word" or "challenge question" known only to you and your close relatives or friends. If you receive a suspicious call, ask the caller for the safety word or the answer to the question.

- Warning: Inability to answer correctly or attempts to evade the question should be taken as warning signs of fraud. Hang up immediately and verify the identity through secure means.

3. Consult Trusted Relatives or Friends

- Action: When encountering urgent financial requests, first check with other family members or trusted people to confirm whether they are aware of the situation or have also received similar calls.

- Benefit: Seeking advice from trusted individuals can help alleviate pressure and avoid being misled by fraudulent information.

4. Report to the Police

- Action: Call the local police hotline (110) to report the fraudulent situation in detail.

- Purpose: Help the police quickly identify and take action against fraudsters to prevent others from becoming victims.

These preventive measures not only help individuals resist AI-related scams but also remind everyone to stay calm and vigilant when dealing with unexpected financial demands to avoid falling into criminal traps.

How Platforms Can Strengthen Prevention

Fraudsters combine advanced technology with psychological manipulation to target victims precisely. Countering AI forgery scams requires effort from every sector of society, including technical defenses, public education, and emotional support, to build a robust wall against fraud. Short-video platforms in particular must combine multiple technologies and measures to detect and block fraudsters' accounts at the source and to identify forged celebrity videos.

1. Detecting Abnormal Devices

- Technology: Dingxiang’s device fingerprinting technology records and compares device behavior, identifying malicious devices such as virtual machines, proxy servers, and emulators.

- Functionality: It analyzes whether devices exhibit abnormal behavior, such as frequent account logins, frequent IP address changes, or device attribute modifications inconsistent with user habits, helping trace and identify fraudsters’ activities.
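Dingxiang's fingerprinting internals are not described here, so the following is only a minimal rule-based sketch of the kinds of checks listed above: emulator or proxy signals, many accounts on one device, frequent IP changes, and changing device attributes within a time window. The `LoginEvent` type, the one-hour window, and both thresholds are hypothetical, and the emulator, proxy, and fingerprint signals are assumed to be supplied as inputs rather than detected.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds, chosen only for illustration.
WINDOW = timedelta(hours=1)
MAX_ACCOUNTS_PER_DEVICE = 3
MAX_IPS_PER_WINDOW = 5


@dataclass
class LoginEvent:
    device_id: str
    account_id: str
    ip: str
    timestamp: datetime
    fingerprint: str           # hash of device attributes (model, OS, screen, etc.)
    is_emulator: bool = False  # assumed emulator / virtual-machine signal
    is_proxy: bool = False     # assumed proxy signal, e.g. from IP intelligence


def device_risk_flags(events: list[LoginEvent], device_id: str, now: datetime) -> list[str]:
    """Return the reasons a device looks abnormal within the window (empty if none)."""
    recent = [e for e in events
              if e.device_id == device_id and now - e.timestamp <= WINDOW]
    flags = []
    if any(e.is_emulator for e in recent):
        flags.append("emulator or virtual machine")
    if any(e.is_proxy for e in recent):
        flags.append("proxy server")
    if len({e.account_id for e in recent}) > MAX_ACCOUNTS_PER_DEVICE:
        flags.append("too many accounts on one device")
    if len({e.ip for e in recent}) > MAX_IPS_PER_WINDOW:
        flags.append("frequent IP address changes")
    if len({e.fingerprint for e in recent}) > 1:
        flags.append("device attributes changed")
    return flags
```

Rules like these are easy to audit; a production system would typically combine them with learned models rather than rely on fixed thresholds.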

2. Identifying Abnormal Account Activity

- Monitoring: Focus on scenarios such as accounts logging in from different locations, changing devices, or reactivating dormant accounts.

- Solution: Use Dingxiang's atbCAPTCHA to verify users continuously during high-risk operations, so that identity is checked throughout the session rather than only at login. This helps quickly distinguish legitimate users from bots and intercept fraudulent behavior in real time.
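A minimal sketch of the monitoring rules above, assuming a simple per-account profile: each sensitive request is compared with the profile, and any mismatch returns a reason to issue an extra challenge. The CAPTCHA itself is not modeled (atbCAPTCHA's API is not shown in this article), and `AccountProfile`, `RequestContext`, the 180-day dormancy cut-off, and the set of high-risk operations are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical policy values, for illustration only.
DORMANT_AFTER = timedelta(days=180)
HIGH_RISK_OPERATIONS = {"transfer", "change_password", "change_phone"}


@dataclass
class AccountProfile:
    usual_city: str
    known_devices: set[str]
    last_active: datetime


@dataclass
class RequestContext:
    city: str
    device_id: str
    operation: str  # e.g. "login", "transfer"
    timestamp: datetime


def step_up_reasons(profile: AccountProfile, ctx: RequestContext) -> list[str]:
    """Return why this request should get an extra challenge (empty list: let it through)."""
    reasons = []
    if ctx.city != profile.usual_city:
        reasons.append("unfamiliar location")
    if ctx.device_id not in profile.known_devices:
        reasons.append("new device")
    if ctx.timestamp - profile.last_active > DORMANT_AFTER:
        reasons.append("dormant account reactivated")
    if ctx.operation in HIGH_RISK_OPERATIONS:
        reasons.append("high-risk operation")
    return reasons
```

To make the check continuous rather than login-only, the function would be called on every sensitive request in the session, and a non-empty result would trigger the challenge before the request proceeds.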

3. Preventing Fake Face-Swapping Videos

- Detection Solution: Dingxiang's full-chain, panoramic facial security threat detection system checks the device, facial information, signs of image forgery, user behavior, and interaction status across multiple dimensions.

- Key Capabilities: It identifies over 30 types of malicious attacks, including injection attacks, liveness forgery, image forgery, camera hijacking, debugging risks, memory tampering, rooting/jailbreaking, malicious ROMs, and emulators. The system can automatically block operations involving forged videos, fake facial images, or abnormal interactive behavior. It also allows flexible configuration of video verification intensity, providing a dynamic mechanism to adjust authentication levels based on user risk.
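The two behaviors described above, blocking outright on detected attacks and otherwise scaling verification intensity to user risk, can be sketched as a small policy function. This is an illustration under stated assumptions, not Dingxiang's configuration format: the risk levels, score cut-offs, check names, and the set of blocking signals are all hypothetical.

```python
from enum import Enum


class RiskLevel(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical configuration: which checks face verification runs at each level.
CHECKS_BY_LEVEL = {
    RiskLevel.LOW: ["passive_liveness"],
    RiskLevel.MEDIUM: ["passive_liveness", "action_liveness"],  # e.g. blink or turn head
    RiskLevel.HIGH: ["passive_liveness", "action_liveness", "device_integrity", "manual_review"],
}

# Hypothetical attack signals that block the operation outright.
BLOCKING_SIGNALS = {"injection_attack", "camera_hijack", "forged_image", "memory_tampering"}


def risk_level(score: float) -> RiskLevel:
    """Map a 0-1 risk score to a level; the cut-offs are illustrative."""
    if score >= 0.7:
        return RiskLevel.HIGH
    if score >= 0.3:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


def verification_plan(score: float, attack_signals: set[str]) -> list[str]:
    """Block on any detected attack; otherwise scale the checks to the user's risk."""
    if attack_signals & BLOCKING_SIGNALS:
        return ["block"]
    return list(CHECKS_BY_LEVEL[risk_level(score)])
```

Keeping the level-to-checks mapping in a table means the intensity can be reconfigured without touching the decision logic.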

4. Detecting Potential Fraud Threats

- Risk Assessment: Dingxiang's Dinsight platform helps businesses perform risk evaluation, anti-fraud analysis, and real-time monitoring, improving the efficiency and accuracy of risk control.

- Key Features: Dinsight executes routine risk-control strategies in under 100 milliseconds on average. It supports multi-source data integration and self-iteration based on mature indicators, strategies, models, and deep learning techniques. The Xintell intelligent modeling platform, integrated with Dinsight, provides a standardized workflow for data processing, feature engineering, model development, and deployment. By mining risk-control logs to surface potential risks, it further automates fraud-detection strategies and can optimize the strategies for known risks with one click.
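Dinsight's strategy engine and Xintell's models are not documented in this article, so the following is only a toy sketch of the general pattern: data from several sources is merged into one event, a set of weighted rules is evaluated, and the evaluation time is measured so it can be compared against a latency budget such as the sub-100 ms figure cited above. The rule names, weights, and threshold are hypothetical.

```python
import time
from typing import Callable

# (name, predicate over the merged event, weight); rules and weights are hypothetical.
Rule = tuple[str, Callable[[dict], bool], float]

RULES: list[Rule] = [
    ("new_device", lambda e: e.get("device_is_new", False), 0.3),
    ("unfamiliar_city", lambda e: e.get("city") != e.get("home_city"), 0.2),
    ("large_amount", lambda e: e.get("amount", 0) > 10_000, 0.3),
    ("device_flagged", lambda e: bool(e.get("device_flags")), 0.4),
]


def evaluate(event: dict, rules: list[Rule] = RULES, threshold: float = 0.6) -> dict:
    """Run every rule, sum the weights of those that fire, and time the evaluation."""
    start = time.perf_counter()
    hits = [(name, weight) for name, predicate, weight in rules if predicate(event)]
    score = min(1.0, sum(weight for _, weight in hits))
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {
        "hits": [name for name, _ in hits],
        "score": score,
        "decision": "review" if score >= threshold else "pass",
        "elapsed_ms": elapsed_ms,
    }


# Multi-source input: device, account, and transaction data merged into one event.
device_data = {"device_is_new": True, "device_flags": ["emulator"]}
account_data = {"home_city": "Wuhan", "city": "Wuhan"}
transaction_data = {"amount": 5_000}
result = evaluate({**device_data, **account_data, **transaction_data})
print(result["hits"], result["decision"])  # prints ['new_device', 'device_flagged'] review
```

Hand-written weights like these are where a modeling platform would come in: learned models or log-mined strategies would replace or tune them, while the evaluate-and-time loop stays the same.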