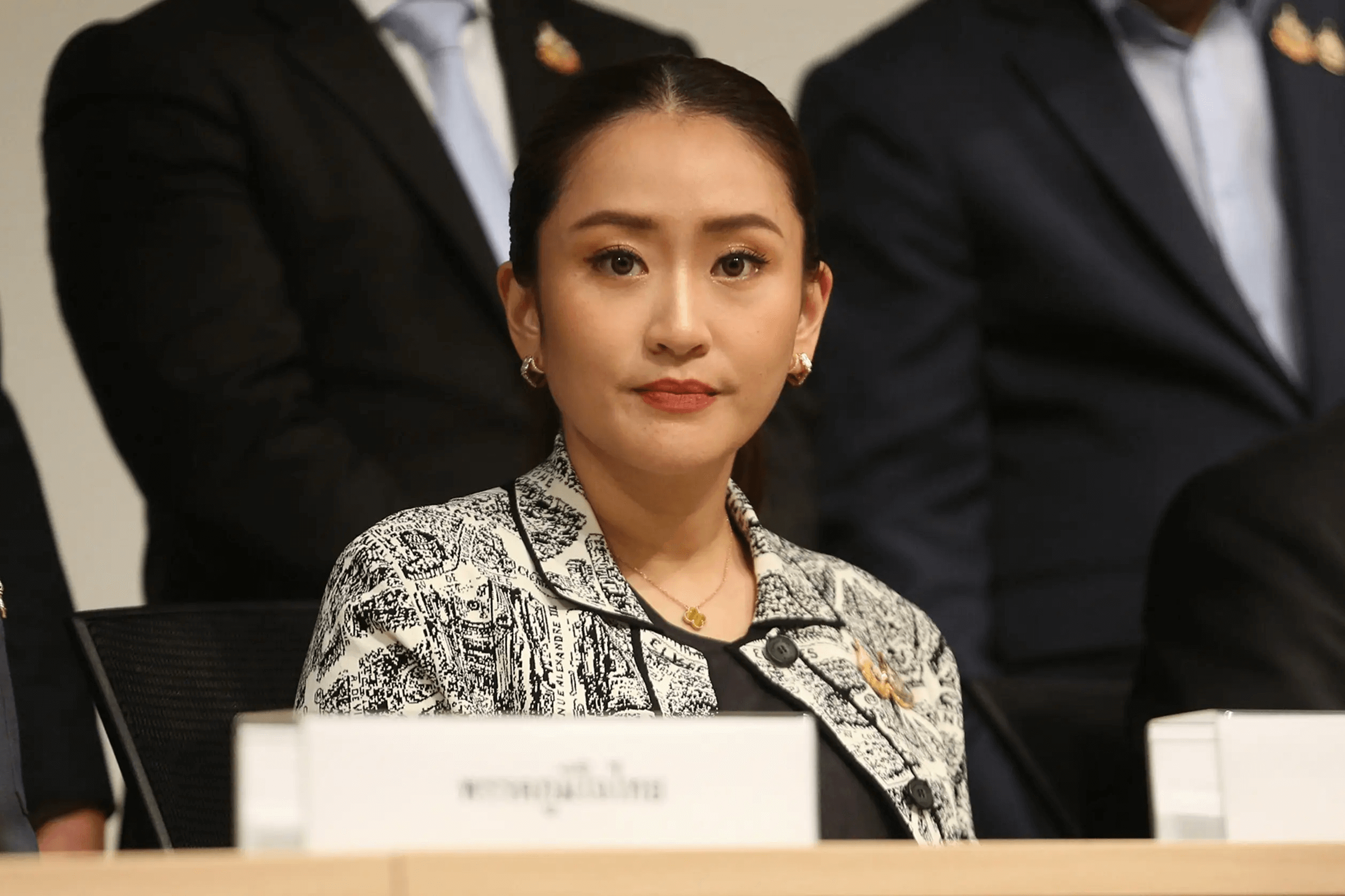

On the 15th, Thai Prime Minister Petongtarn told the media that she had been targeted by scammers who used AI technology to imitate the voice of a leader of an ASEAN country. Claiming to speak on behalf of that country, they tried to persuade her to make a donation, and she almost fell for it. She said she was sharing the incident to raise public awareness of telecommunication fraud.

In her media interview, Petongtarn recalled, "The voice was so similar and very clear; I had heard this voice before." The scammers then asked her to donate, claiming that "Thailand is the only ASEAN country that hasn't donated." This raised her suspicions, but it was only when they sent details of a bank account that did not belong to the country they claimed to represent that she realized it was a scam.

Petongtarn has instructed the Deputy Prime Minister and Minister of Digital Economy and Society, Phatthana, to handle the case and reminded the public to stay vigilant about telecom fraud.

How to Protect Against AI Impersonation Scams

The voices and appearances of political figures are highly public, and recordings of them are widely disseminated. This gives scammers easy access to enough audio material for synthetic voice generation.

To protect against AI impersonation scams involving political figures or celebrities, Dingxiang’s Defense Cloud Business Security Intelligence Center advises the public to remain alert and adopt multi-layered verification measures.

- Call Back to Verify Identity

  Hang up the phone and reconnect using a known, reliable contact method (e.g., a family member’s number saved in your phone or a contact provided on an official website). Do not call back using the number provided by the caller, as scammers may use spoofed caller IDs.

- Contact the Person or Their Team Through Other Means

  If you receive a call claiming to be from a political figure or celebrity, try contacting the relevant agency or team directly to confirm the authenticity of the request, preventing a hasty decision under the influence of scammers.

- Consult Trusted Family or Friends

  If you receive a call or message asking you to donate or transfer money, consult trusted family or friends to see if they’ve received similar requests or if there are any indications of similar events. If you're unsure, ask for their help in verifying the situation.

- Increase Awareness of AI Forgery Technology

  When receiving a call, even if the voice seems familiar, pay attention to inconsistencies in tone, fluency, or emotional expression. AI-generated voices may lack some details, such as variations in tone or emotional authenticity. If you notice any inconsistencies, remain skeptical and cautious.