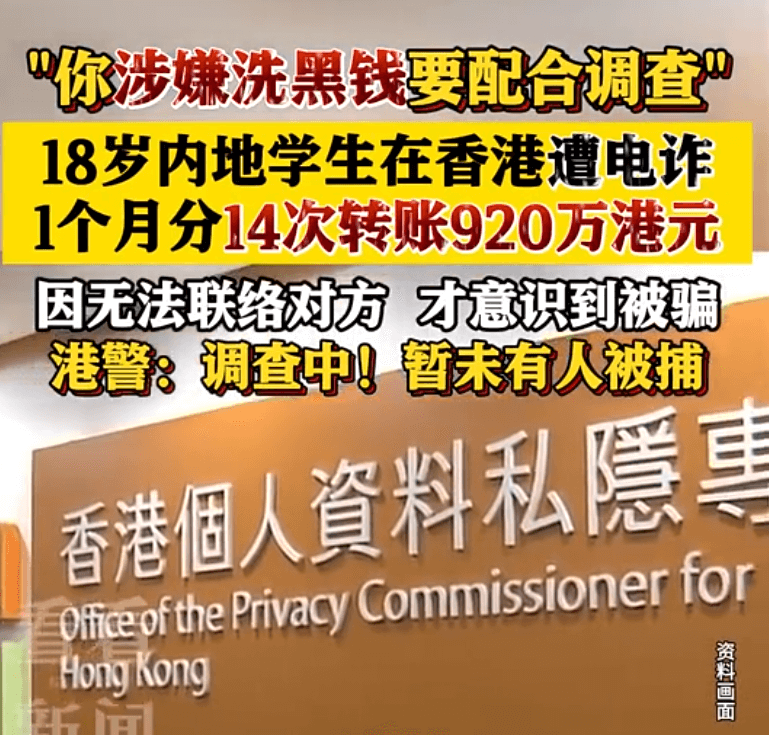

In November 2024, an 18-year-old female student from mainland China studying at the University of Hong Kong received a call from someone claiming to be a "mainland law enforcement officer," who accused her of involvement in a money laundering case and demanded that she transfer approximately HKD 9.2 million to multiple bank accounts "for investigation." She realized she had been scammed only after she could no longer reach the caller.

The student, surnamed Hong, came to Hong Kong from mainland China in September 2024. On November 27, 2024, she received a call from someone claiming to be a telecommunications company employee, who accused her of involvement in a money laundering case and then transferred the call to someone claiming to be a "mainland law enforcement officer." The fraudster instructed her to download an app for "monitoring," and between December 2, 2024, and January 4, 2025, she transferred a total of approximately HKD 9.2 million to 3 to 5 local accounts provided by the fraudster, supposedly to assist the investigation. After she lost contact with the fraudster, she realized it was a scam and reported it to the police on January 9, 2025. The Criminal Investigation Team of the Hong Kong Western District is investigating the case and has issued arrest warrants for five suspects.

This incident is not an isolated case. According to emails the University of Hong Kong sent to students, more than 60 students have fallen victim to fraud in recent months, with total losses exceeding HKD 60 million. Most victims were first-year mainland students, and most of the scams took place between September and October 2024, when the students were still unfamiliar with Hong Kong's environment and culture and therefore easy targets. According to police statistics, Hong Kong recorded 40,470 fraud cases from January to November 2024, up 8.7% year-on-year, involving a total of HKD 8.52 billion. Phone fraud cases rose by 172.8% to 5,168. Between September and November 2024, the Hong Kong Island district alone recorded 55 "imposter official" scams targeting mainland students, with total losses of HKD 39 million.
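The police figures above give growth rates rather than the prior-year counts. As a rough arithmetic check, the baselines they imply can be backed out by dividing each figure by (1 + growth rate). The Python sketch below does this; the function name is illustrative, and its outputs are derived estimates, not official statistics.

```python
# Derived estimates only: back out the prior-year baselines implied by the
# year-on-year growth rates quoted in the police statistics.

def implied_prior(current: float, growth_pct: float) -> float:
    """Return the prior-period value implied by `current` and a growth rate in percent."""
    return current / (1 + growth_pct / 100)

# All fraud cases, Jan-Nov 2024: 40,470 cases, up 8.7% year-on-year
all_fraud_prev = implied_prior(40_470, 8.7)
# Phone fraud cases, Jan-Nov 2024: 5,168 cases, up 172.8%
phone_fraud_prev = implied_prior(5_168, 172.8)
# Hong Kong Island "imposter official" scams, Sep-Nov 2024: HKD 39 million over 55 cases
avg_loss_per_case = 39_000_000 / 55

print(f"Implied Jan-Nov 2023 fraud cases: {all_fraud_prev:,.0f}")
print(f"Implied Jan-Nov 2023 phone fraud cases: {phone_fraud_prev:,.0f}")
print(f"Average loss per Hong Kong Island case: HKD {avg_loss_per_case:,.0f}")
```

Run as written, it prints about 37,231 and 1,894 cases for the same period of 2023, and an average loss of about HKD 709,091 per Hong Kong Island case. In other words, phone fraud grew to roughly 2.7 times its earlier level while overall fraud grew by less than a tenth.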

Why Are 18-Year-Old Students So Easily Scammed?

Mainland university students are vulnerable to scams for several reasons, including limited life experience, psychological vulnerability, cultural differences, inadequate anti-fraud education, and increasingly sophisticated scam tactics.

- Unfamiliarity with the local environment. For many mainland students, Hong Kong is a completely new place to live and study. Their unfamiliarity with local culture, the legal system, and social norms leaves them more exposed to external threats: when fraudsters impersonate "law enforcement officers," students who do not know how real officers operate find it easier to trust them and follow their instructions.

- Lack of social experience. Although academically successful, many mainland students have little experience of living independently or handling complex situations. With little real-world exposure to scams or anti-fraud information, they find it hard to stay calm when they receive an unexpected call, which makes them easy for scammers to manipulate.

- Highly convincing scam techniques. Fraudsters not only impersonate "law enforcement officers" over the phone but also exploit the authority of the legal system and banks to frighten their victims. They typically manufacture an "emergency" that forces the victim to react quickly, manipulating emotions so that rational thinking becomes difficult. Victims accept the fraudsters' "official" identities without question and, under pressure and time constraints, fail to verify the information before handing over money.

- Lack of anti-fraud education and information. Although the University of Hong Kong has run some anti-fraud publicity, many victims had not been exposed to it, and anti-fraud education was especially thin at the start of the school year. Mainland students tend to focus on academic performance and course selection rather than on anti-fraud knowledge and practical life skills. Given the growing variety of scams, simple verbal reminders and sporadic publicity are clearly not enough to capture students' attention, and many new students remain unaware of the security risks in their social and online environments, which makes them more vulnerable.

To address these problems, society and educational institutions need to pay more attention to cultivating students' security awareness, help students improve their ability to identify fraud, and provide adequate safety education.

How to Effectively Prevent Phone Scams?

In this new type of telecom and online fraud, scammers use realistic AI-generated audio and video, which makes abnormalities hard to detect by intuition alone. Even when verifying the other party's identity by phone or video, it is therefore crucial to confirm it through several independent methods rather than making rushed decisions. The Dingxiang Defense Cloud Business Security Intelligence Center advises the public to stay alert and adopt multi-layered verification measures.

- Call back to verify identity. Hang up and contact the other party through known, reliable contact details, such as a number already saved in your phone or the official contact information published on the organization's website. Do not call back the number the caller gives you, as scammers may spoof caller ID.

- Consult teachers, classmates, or friends. If you receive a call or message asking you to donate or transfer money, check with friends and family whether they have received similar requests or heard of similar incidents. If you cannot judge for yourself, ask them to help you verify.

- Be aware of AI forgery techniques. Even if a caller's voice sounds familiar, be suspicious immediately if the tone, fluency, or emotional expression seems inconsistent. AI-generated voices may lack natural pitch variation or genuine emotion.

- Call the police for help. Dial the local emergency number and report the fraud to the police in detail.
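The verification habits above can be condensed into a simple red-flag checklist. The Python sketch below is illustrative only: the field names, the rules, and the placeholder phone numbers are hypothetical, modeled on the scam described in this article rather than on official police guidance or any Dingxiang product.

```python
from dataclasses import dataclass


@dataclass
class IncomingRequest:
    # Hypothetical fields; each mirrors a tactic described in this article.
    claims_official_identity: bool    # caller says they are police, a telecom or bank employee, etc.
    asks_for_transfer: bool           # asks you to move money, e.g. "for investigation"
    demands_urgency_or_secrecy: bool  # "act now", "tell no one"
    asks_to_install_app: bool         # e.g. an app "for monitoring"
    callback_number: str              # the number the caller gives you to call back


def reasons_to_hang_up(req: IncomingRequest, trusted_numbers: set[str]) -> list[str]:
    """Return the red flags found; any non-empty result means: hang up and verify independently."""
    reasons = []
    if req.claims_official_identity and req.asks_for_transfer:
        reasons.append("official identity combined with a money transfer request")
    if req.demands_urgency_or_secrecy:
        reasons.append("pressure to act quickly or in secret")
    if req.asks_to_install_app:
        reasons.append("request to install an app")
    if req.callback_number not in trusted_numbers:
        # Only call back numbers you already had, e.g. saved contacts or an official website.
        reasons.append("callback number did not come from a trusted source")
    return reasons


# Placeholder numbers for illustration only.
trusted = {"+852 0000 0000"}
scam_call = IncomingRequest(
    claims_official_identity=True,
    asks_for_transfer=True,
    demands_urgency_or_secrecy=True,
    asks_to_install_app=True,
    callback_number="+852 1111 1111",
)
for reason in reasons_to_hang_up(scam_call, trusted):
    print("-", reason)
```

The call pattern in the HKU case trips all four checks. An empty list is not proof that a call is genuine; it only means none of these particular red flags was found.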

These measures not only help individuals defend against AI-enabled fraud but also remind everyone to stay calm and vigilant when faced with sudden requests for money, so that they do not fall into criminals' traps.

How Can Platforms Strengthen Prevention?

Fraudsters combine technology and psychology to manipulate their victims precisely. Against AI-driven fraud, society must work together, using technology, education, and emotional support, to build strong defenses that keep more people from being deceived. Short video platforms in particular need to adopt multiple technical measures to identify fraudulent accounts at the source and to detect fake celebrity videos effectively.

Identify Abnormal Devices

Dingxiang Device Fingerprinting distinguishes legitimate users from potential fraud by recording and comparing device fingerprints. It uniquely identifies each device and detects maliciously controlled devices such as virtual machines, proxy servers, and emulators. It also analyzes whether a device shows abnormal or inconsistent behavior, such as logging in to multiple accounts, frequently changing IP addresses, or altering device attributes, helping to track and identify fraudulent activity.
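To make the idea concrete, here is a minimal device-fingerprinting sketch in Python. It is not Dingxiang's implementation or API: it assumes the client reports a dictionary of device attributes, hashes a canonical encoding of them with SHA-256, and raises hypothetical flags for emulators or virtual machines, proxies, many accounts on one device, and frequent IP changes. The attribute names and thresholds are illustrative assumptions.

```python
import hashlib
import json
from collections import defaultdict


def device_fingerprint(attrs: dict) -> str:
    """Hash a canonical JSON encoding of device attributes into a stable ID."""
    canonical = json.dumps(attrs, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class DeviceMonitor:
    """Tracks accounts and IPs seen per fingerprint and raises simple risk flags."""

    def __init__(self, max_accounts: int = 3, max_ips: int = 5):
        self.accounts = defaultdict(set)  # fingerprint -> account IDs seen
        self.ips = defaultdict(set)       # fingerprint -> IP addresses seen
        self.max_accounts = max_accounts  # hypothetical threshold
        self.max_ips = max_ips            # hypothetical threshold

    def observe(self, attrs: dict, account_id: str, ip: str) -> tuple[str, list[str]]:
        fp = device_fingerprint(attrs)
        self.accounts[fp].add(account_id)
        self.ips[fp].add(ip)
        flags = []
        if attrs.get("is_emulator") or attrs.get("is_vm"):
            flags.append("emulator_or_vm")
        if attrs.get("via_proxy"):
            flags.append("proxy")
        if len(self.accounts[fp]) > self.max_accounts:
            flags.append("multi_account")
        if len(self.ips[fp]) > self.max_ips:
            flags.append("ip_churn")
        return fp, flags


monitor = DeviceMonitor()
phone = {"model": "ExamplePhone", "os": "Android 14", "is_emulator": False}
for n in range(4):
    _, flags = monitor.observe(phone, f"account-{n}", "203.0.113.7")
print(flags)  # ['multi_account'] once a fourth account appears on the same device
```

Because this exact hash changes whenever any attribute changes, it cannot by itself link a device across attribute changes; production fingerprinting relies on more stable signals and fuzzier matching.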

Identify Abnormal Account Activity

Logins from unusual locations, device changes, phone number changes, and dormant accounts that suddenly become active should all trigger additional verification. Continuous identity verification during a session is also crucial to ensure that the user remains the same person throughout. Dingxiang atbCAPTCHA quickly and accurately distinguishes human from machine operators, precisely identifying fraudulent behavior, monitoring activity in real time, and intercepting abnormal operations.
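The triggers listed above map naturally onto a rule check run at login and again before sensitive actions within a session. The Python sketch below is a hypothetical illustration, not atbCAPTCHA's logic: the profile fields and the 90-day dormancy threshold are assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

DORMANT_AFTER = timedelta(days=90)  # hypothetical threshold for "dormant"


@dataclass
class AccountProfile:
    usual_region: str
    phone: str
    last_active: datetime
    known_devices: set[str] = field(default_factory=set)


@dataclass
class LoginEvent:
    region: str
    device_id: str
    phone: str
    at: datetime


def step_up_reasons(event: LoginEvent, profile: AccountProfile) -> list[str]:
    """Return why this login should trigger extra verification (empty list = none)."""
    reasons = []
    if event.region != profile.usual_region:
        reasons.append("login_from_unusual_location")
    if event.device_id not in profile.known_devices:
        reasons.append("new_device")
    if event.phone != profile.phone:
        reasons.append("phone_number_changed")
    if event.at - profile.last_active > DORMANT_AFTER:
        reasons.append("dormant_account_reactivated")
    return reasons


profile = AccountProfile("Hong Kong", "phone-A", datetime(2024, 1, 1), {"device-1"})
event = LoginEvent("Hong Kong", "device-2", "phone-A", datetime(2024, 6, 1))
print(step_up_reasons(event, profile))  # ['new_device', 'dormant_account_reactivated']
```

Any non-empty result would route the user to an extra challenge, such as a CAPTCHA or a face check, instead of blocking outright.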

Prevent Face-Swapping Fake Videos

Dingxiang's full-link panoramic face security threat perception solution performs intelligent verification across multiple dimensions, including device environment, facial data, image authentication, user behavior, and interaction status. It quickly identifies more than 30 types of malicious attacks, such as injection attacks, liveness forgery, image forgery, camera hijacking, debugging risks, memory tampering, Root/Jailbreak, malicious ROMs, and emulators. When it detects fake videos, fraudulent facial images, or abnormal interactions, it automatically blocks the operation. Video verification strength and user-friendliness can also be configured flexibly, enabling a dynamic mechanism that strengthens verification for abnormal users while keeping verification lightweight for normal users.
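The "dynamic mechanism" described above amounts to choosing a verification strength from the risk signals detected. The Python sketch below shows one possible tiering; the tier names, the signal names, and the rule that certain attacks block outright are hypothetical assumptions, not the behavior of Dingxiang's solution.

```python
from enum import IntEnum


class Verification(IntEnum):
    PASSIVE = 1      # silent liveness check only
    ACTION = 2       # ask the user to perform an action, e.g. blink or turn their head
    ACTION_PLUS = 3  # action check plus an additional factor
    BLOCK = 4        # stop the operation


# Hypothetical signal names, loosely based on the attack types listed above.
HARD_BLOCK = {"injection_attack", "camera_hijacking", "memory_tampering"}


def choose_verification(signals: set[str]) -> Verification:
    """Map detected environment and face risk signals to a verification strength."""
    if signals & HARD_BLOCK:
        return Verification.BLOCK
    if not signals:
        return Verification.PASSIVE
    if len(signals) == 1:
        return Verification.ACTION
    return Verification.ACTION_PLUS


print(choose_verification(set()).name)                      # PASSIVE
print(choose_verification({"root_or_jailbreak"}).name)      # ACTION
print(choose_verification({"emulator", "debugging"}).name)  # ACTION_PLUS
print(choose_verification({"camera_hijacking"}).name)       # BLOCK
```

Normal users with no risk signals see only the lightest check, while each additional signal raises the bar.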

Identify Potential Fraud Threats

Dingxiang Dinsight helps enterprises with risk assessment, anti-fraud analysis, and real-time monitoring, improving the efficiency and accuracy of risk control. Its daily risk control strategies take less than 100 milliseconds to process on average, and it supports configurable access to and accumulation of data from multiple sources. Paired with the Xintell smart model platform, it automatically optimizes security strategies for known risks, mines risk logs and other data to uncover potential risks, and configures risk control strategies for different scenarios with one click.
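A risk control strategy of this kind can be pictured as weighted rules evaluated against each event within a latency budget. The Python sketch below is a toy rules engine, not Dinsight's API: the rule names, weights, thresholds, and the HKD amounts are hypothetical, and only the 100-millisecond budget comes from the text above.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    weight: int
    matches: Callable[[dict], bool]


# Hypothetical rules and weights for a money-transfer event.
RULES = [
    Rule("emulator_device", 40, lambda e: bool(e.get("is_emulator"))),
    Rule("large_transfer_to_new_payee", 35,
         lambda e: bool(e.get("new_payee")) and e.get("amount", 0) >= 50_000),
    Rule("many_transfers_today", 25, lambda e: e.get("transfers_today", 0) >= 5),
]


def evaluate(event: dict, rules=RULES, review_at=40, reject_at=70, budget_ms=100.0) -> dict:
    """Score an event against weighted rules and report the decision and latency."""
    start = time.perf_counter()
    hits = [r for r in rules if r.matches(event)]
    score = sum(r.weight for r in hits)
    if score >= reject_at:
        decision = "reject"
    elif score >= review_at:
        decision = "review"
    else:
        decision = "pass"
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {
        "decision": decision,
        "score": score,
        "hits": [r.name for r in hits],
        "elapsed_ms": elapsed_ms,
        "within_budget": elapsed_ms <= budget_ms,
    }


result = evaluate({"is_emulator": True, "new_payee": True, "amount": 200_000})
print(result["decision"], result["score"], result["hits"])
```

Here an emulator plus a large transfer to a new payee scores 75 and is rejected. Keeping rules as data rather than code is what allows strategies to be tuned per scenario without redeploying.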
